Experimental Design & Common Pitfalls of Machine Learning in Finance

The first lecture from the Experimental Design and Common Pitfalls of Machine Learning in Finance series addresses the four horsemen that present a barrier to adopting the scientific approach to machine learning in finance.

The second lecture focuses on a backtesting protocol and on how to avoid the seven sins of backtesting. By following the research protocol outlined in these articles, an investment manager can avoid seven common mistakes made when backtesting and building quant models.

This lecture series is a sample of our weekly reading group, held every Friday and recorded for our members to enable on-demand viewing. Papers are carefully selected and reviewed by experts in the field, and are accompanied by detailed annotations that provide insight and context.

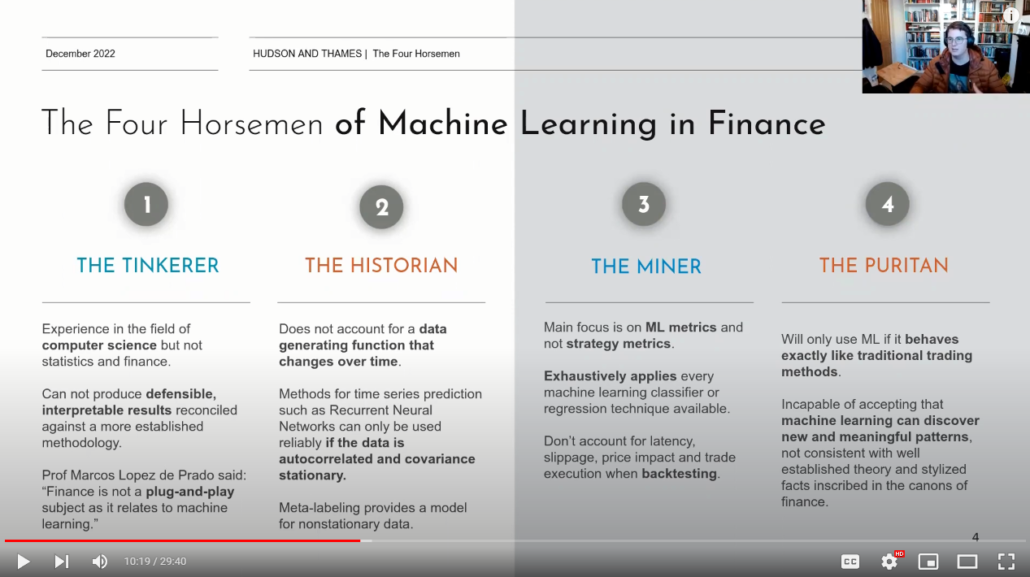

The Four Horsemen of Machine Learning in Finance

Machine learning has been used in the financial services industry for over 40 years, but it is only recently that it has become more widespread in investment management and trading. Machine learning offers a more general framework for financial modeling than its linear parametric predecessors.

Despite its potential, there are barriers to the adoption of machine learning in the financial industry, and they often stem from the interdisciplinary nature of the field. Based on discussions with experts and the authors’ experience using both machine learning and traditional quantitative finance in investment banks, asset management, and securities trading firms, this article identifies the major red flags and provides guidelines and solutions to avoid them. Examples using supervised learning and reinforcement learning in investment management and trading are provided to illustrate best practices.

Seven Point Backtesting Protocol

Machine learning has the potential to revolutionize investment management. However, applying these techniques in finance comes with several limitations. One of the biggest is data availability: many machine learning applications need large amounts of data to be effective, and financial data are often limited.

This is especially true for longer-term investment strategies, where low-frequency data yield relatively few observations. Investors therefore need to choose the applications for their models carefully and to be cautious when using these tools.
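One rough way to see why a short history is so constraining: under the simplifying assumption of independent, identically distributed returns, the t-statistic of a strategy's mean return is approximately its annualized Sharpe ratio times the square root of the number of years of data. The sketch below is illustrative only; the function names and the threshold of 2 are assumptions chosen for the example, not part of the protocol.

```python
import math


def sharpe_t_stat(annual_sharpe: float, years: float) -> float:
    """Approximate t-statistic of the mean return, assuming i.i.d. returns."""
    return annual_sharpe * math.sqrt(years)


def years_needed(annual_sharpe: float, t_threshold: float = 2.0) -> float:
    """Years of history needed for the t-statistic to reach t_threshold."""
    return (t_threshold / annual_sharpe) ** 2


# Hypothetical Sharpe ratios, for illustration only.
for sharpe in (0.5, 1.0, 2.0):
    print(
        f"Sharpe {sharpe:.1f}: "
        f"t-stat after 10 years = {sharpe_t_stat(sharpe, 10):.2f}, "
        f"years needed for t >= 2 = {years_needed(sharpe):.1f}"
    )
```

Under these assumptions, a strategy with an annualized Sharpe ratio of 0.5 would need about 16 years of data to reach a t-statistic of 2, before any allowance for multiple testing.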

Beyond data availability, the complexity of capital markets presents its own challenges for machine learning. Capital markets are influenced by human behavior, which can be unpredictable and hard to model, so machine learning techniques must be applied with care to be effective in finance.

By carefully choosing the right applications and following a rigorous research protocol, investors can make the most of machine learning in finance. In this article, the authors develop a research protocol that applies both to machine learning techniques and to quantitative finance in general.

The Protocol

As per Exhibit 2, p. 16:

- Research Motivation
  - Does the model have a solid economic foundation?
  - Did the economic foundation or hypothesis exist before the research was conducted?

- Multiple Testing and Statistical Methods
  - Did the researcher keep track of all models and variables that were tried (both successful and unsuccessful) and are the researchers aware of the multiple-testing issue?
  - Is there a full accounting of all possible interaction variables if interaction variables are used?
  - Did the researchers investigate all variables set out in the research agenda or did they cut the research as soon as they found a good model?

- Data and Sample Choice
  - Do the data chosen for examination make sense? And, if other data are available, does it make sense to exclude them?
  - Did the researchers take steps to ensure the integrity of the data?
  - Do the data transformations, such as scaling, make sense? Were they selected in advance? And are the results robust to minor changes in these transformations?
  - If outliers are excluded, are the exclusion rules reasonable?
  - If the data are winsorized, was there a good reason to do it? Was the winsorization rule chosen before the research was started? Was only one winsorization rule tried (as opposed to many)?

- Cross-Validation
  - Are the researchers aware that true out-of-sample tests are only possible in live trading?
  - Are steps in place to eliminate the risk of out-of-sample “iterations” (i.e., an in-sample model that is later modified to fit out-of-sample data)?
  - Is the out-of-sample analysis representative of live trading? For example, are trading costs and data revisions taken into account?

- Model Dynamics
  - Is the model resilient to structural change and have the researchers taken steps to minimize the overfitting of the model dynamics?
  - Does the analysis consider the risk/likelihood of overcrowding in live trading?
  - Do researchers take steps to minimize the tweaking of a live model?

- Complexity
  - Does the model avoid the curse of dimensionality?
  - Have the researchers taken steps to produce the simplest practicable model specification?
  - Is an attempt made to interpret the predictions of the machine learning model rather than using it as a black box?

- Research Culture
  - Does the research culture reward quality of the science rather than finding the winning strategy?
  - Do the researchers and management understand that most tests will fail?
  - Are expectations clear (that researchers should seek the truth not just something that works) when research is delegated?
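The multiple-testing items above can be made concrete with a small sketch. The protocol as summarized here does not prescribe a particular correction; Bonferroni and Holm are two standard adjustments used purely for illustration, and the p-values below are hypothetical. The point is that every variant tried, including the failures, has to be counted.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: each p-value times the number of tests."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def holm(p_values):
    """Holm step-down adjusted p-values (never larger than Bonferroni)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is scaled by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted p-values keep the original ordering.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical p-values for every strategy variant tried, failures included.
trial_p_values = [0.004, 0.03, 0.04, 0.20, 0.45, 0.60, 0.75, 0.90]

raw_hits = sum(p < 0.05 for p in trial_p_values)
holm_hits = sum(p < 0.05 for p in holm(trial_p_values))
bonf_hits = sum(p < 0.05 for p in bonferroni(trial_p_values))
print(f"significant at 5%: raw={raw_hits}, Holm={holm_hits}, Bonferroni={bonf_hits}")
```

If only the three "winning" variants had been recorded, the adjustment would be computed over three tests instead of eight and would understate the problem, which is why the protocol asks for a log of everything that was tried.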

Seven Sins of Quantitative Investing

Quant investing has been made to seem easy by the rise of computing power and the availability of off-the-shelf backtesting software. However, there are common mistakes that investors tend to make when they perform backtesting and build quant models. In this paper, the authors discuss the seven biases or “sins” that are commonly found in quantitative modeling.

Some of these biases may be familiar to readers, who may still be surprised by the size of their impact. Others are so common in both academic and practitioner research that they are often taken for granted.

This research has several distinctive features. The authors discuss when to remove outliers and when not to, various data normalization techniques, the issues of signal decay, turnover, and transaction costs, the optimal rebalancing frequency, and asymmetric factor payoff patterns. They also address the impact of short availability on portfolio performance and the question of how many stocks a portfolio should hold. They review the tradeoffs of various factor weighting and portfolio construction techniques, and compare traditional active portfolio management with the newer trend of smart beta and factor investing.
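To illustrate how turnover and transaction costs interact, here is a minimal sketch that computes one-way turnover at a rebalance and the resulting net-of-cost return. It is not the authors' method: the function names, the example weights, and the flat cost per side are assumptions, and the sketch ignores market impact and the drift of weights between rebalances.

```python
def one_way_turnover(old_weights, new_weights):
    """Half the sum of absolute weight changes across all assets."""
    assets = set(old_weights) | set(new_weights)
    return 0.5 * sum(
        abs(new_weights.get(a, 0.0) - old_weights.get(a, 0.0)) for a in assets
    )


def net_return(gross_return, turnover, cost_bps_per_side):
    """Gross period return minus a flat cost charged on both buys and sells."""
    traded_notional = 2.0 * turnover  # buys plus sells, as a fraction of NAV
    return gross_return - traded_notional * cost_bps_per_side / 10_000


# Hypothetical portfolio weights before and after a rebalance.
old = {"A": 0.50, "B": 0.50}
new = {"A": 0.25, "B": 0.25, "C": 0.50}

turnover = one_way_turnover(old, new)
net = net_return(gross_return=0.01, turnover=turnover, cost_bps_per_side=10)
print(f"one-way turnover = {turnover:.2%}, net return = {net:.4%}")
```

A signal that looks attractive before costs can lose much of its edge once a cost term like this is applied at every rebalance, which is why rebalancing frequency and signal decay need to be evaluated together.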

Finally, the authors provide a hands-on tutorial on how to avoid the seven sins when building a multi-factor model and portfolio, using a real-life example. Quant investing may seem easy, but avoiding these common pitfalls is essential to success.