Copula for Statistical Arbitrage: A Practical Intro to Vine Copula

by Hansen Pei


This is the fourth article of the copula-based statistical arbitrage series. The previous three articles focused on pairs trading.


Introduction

Copula is a great statistical tool for studying the relation among multiple random variables: by focusing on the joint cumulative distribution of the marginals' quantiles, we can bypass the idiosyncratic features of the marginal distributions and look directly at how the variables are “related”.

Indeed, traders and analysts have been using copulas to exploit statistical arbitrage under the pairs trading framework for some time, and we have implemented some of the most popular methods in ArbitrageLab. However, it is natural to expand beyond a single pair of stocks: a great number of competing stat arb methods already exist alongside copula, thinning the potential alpha. It is also intuitive for humans to think about the relative pricing of 2 stocks, whereas in higher dimensions it is not so easy, which leaves great opportunities for quantitative approaches.

Copula itself is not limited to 2 dimensions. You can extend it to arbitrarily many dimensions. The disadvantage is on the practical side: when probabilists originally created copulas, they focused, for the sake of theoretical analysis, on a very small subset of copulas with strict structural assumptions about their mathematical form. With only 2 dimensions, in most cases you can still model a pair of random variables reasonably well with the existing bivariate copulas. In higher dimensions, however, such a copula model becomes quite rigid and tends to lose a lot of useful detail.

Vine copula was invented exactly to address this high dimensional probabilistic modeling problem. Instead of using an N-dimensional copula directly, it decomposes the probability density into conditional probabilities, and further decomposes the conditional probabilities into bivariate copulas. To sum up:

The idea is that we decompose the high dimensional pdf into bivariate copulas (densities) and marginal densities.

Let’s start with the conditional density.

Simple Examples

Conditional Density and Bivariate Copula

At first let’s see how to relate a conditional density to a copula density. Suppose we have continuous random variables $X_1, X_2$ and their realizations $x_1, x_2$. They have CDFs $F_1, F_2$ and PDFs $f_1, f_2$ respectively. We can find their joint probability density function from conditional probability:

$$P(X_1 = x_1, X_2 = x_2) = P(X_1 = x_1 \mid X_2 = x_2) \, P(X_2 = x_2)$$

Equivalently we can use $f$ to denote probability density as follows:

$$f(x_1, x_2) = f_{1|2}(x_1 \mid x_2) \, f_2(x_2)$$

Now we focus on $f(x_1, x_2)$. Notice that

$$f(x_1, x_2) = \frac{\partial^2 F(x_1, x_2)}{\partial x_1 \, \partial x_2} = \frac{\partial^2 C_{1,2}(F_1(x_1), F_2(x_2))}{\partial x_1 \, \partial x_2} = c_{1,2}(F_1(x_1), F_2(x_2)) \, f_1(x_1) \, f_2(x_2) \tag{1}$$

where $C_{1,2}$ is the copula of $(X_1, X_2)$ and $c_{1,2}$ is its density. Therefore we arrive at the key relation we will use over and over:

$$f_{1|2}(x_1 \mid x_2) = c_{1,2}(F_1(x_1), F_2(x_2)) \, f_1(x_1) \tag{2}$$

Trivially if we consider the probability subspace conditioned on some other random variable $X_3$:

$$f_{1|2,3}(x_1 \mid x_2, x_3) = c_{1,2|3}(F_{1|3}(x_1 \mid x_3), F_{2|3}(x_2 \mid x_3)) \, f_{1|3}(x_1 \mid x_3) \tag{3}$$

where $F_{1|3}(x_1 \mid x_3) = P(X_1 \le x_1 \mid X_3 = x_3)$, and $c_{1,2|3}$ is a bivariate copula density but the two legs of random variables are conditioned on $X_3 = x_3$ (i.e., think about $X_3 = x_3$ as the background information). Remember the goal is to decompose the probability density into bivariate copula and marginal densities. The example above is trivial in the sense that it has only 2 dimensions.
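The factorization of a joint density into a copula density times the marginal densities can be checked numerically. The sketch below assumes a Gaussian copula with standard normal marginals (an illustrative choice, not something the article prescribes), and the function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal marginals, an assumption of this demo


def gaussian_copula_density(u: float, v: float, rho: float) -> float:
    """Density c(u, v) of the bivariate Gaussian copula with correlation rho."""
    a, b = STD_NORMAL.inv_cdf(u), STD_NORMAL.inv_cdf(v)
    expo = -(rho**2 * (a**2 + b**2) - 2.0 * rho * a * b) / (2.0 * (1.0 - rho**2))
    return math.exp(expo) / math.sqrt(1.0 - rho**2)


def bivariate_normal_pdf(x1: float, x2: float, rho: float) -> float:
    """Joint density of two standard normals with correlation rho."""
    q = (x1**2 - 2.0 * rho * x1 * x2 + x2**2) / (1.0 - rho**2)
    return math.exp(-0.5 * q) / (2.0 * math.pi * math.sqrt(1.0 - rho**2))


x1, x2, rho = 0.3, -1.1, 0.6

# Right-hand side: copula density evaluated at the quantiles, times the marginals.
copula_side = (
    gaussian_copula_density(STD_NORMAL.cdf(x1), STD_NORMAL.cdf(x2), rho)
    * STD_NORMAL.pdf(x1)
    * STD_NORMAL.pdf(x2)
)
# Left-hand side: the joint density computed directly.
joint_side = bivariate_normal_pdf(x1, x2, rho)

print(copula_side, joint_side)  # the two values should agree up to rounding
```

Dividing both sides by the marginal density of the second variable gives the conditional density written as a copula density times the first marginal density.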

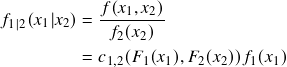

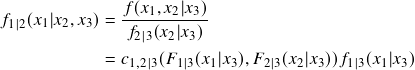

3D Decomposition

Now if we go to 3 dimension for ![]() , there are 6 ways to decompose using conditional probabilities:

, there are 6 ways to decompose using conditional probabilities:

(4)

We will not bore you with all the tedious expansions, and let’s focus on the specific decomposition in the first line ![]() to write it in terms of bivariate copula and marginal pdf:

to write it in terms of bivariate copula and marginal pdf:

![]()

![]()

Here ![]() can be further decomposed as

can be further decomposed as

![]()

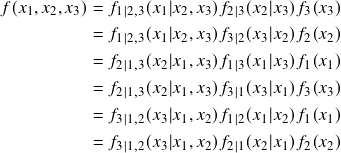

Collect all the above together and clean it up we have the following nice structure

(5)
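To make the three-variable structure concrete, here is a minimal sketch that multiplies three marginal densities, two unconditional pair copulas and one conditional pair copula, and compares the result with a directly computed joint density. It assumes a trivariate normal distribution with variable 3 as the shared node, so that every pair copula is Gaussian and the conditional copula uses the partial correlation. All names and the correlation values are hypothetical.

```python
import math
from statistics import NormalDist

N01 = NormalDist()


def gauss_cop(u, v, rho):
    """Bivariate Gaussian copula density."""
    a, b = N01.inv_cdf(u), N01.inv_cdf(v)
    expo = -(rho**2 * (a**2 + b**2) - 2.0 * rho * a * b) / (2.0 * (1.0 - rho**2))
    return math.exp(expo) / math.sqrt(1.0 - rho**2)


def cond_cdf(x, x_given, rho):
    """F(x | x_given) for two standard normals with correlation rho."""
    return N01.cdf((x - rho * x_given) / math.sqrt(1.0 - rho**2))


def vine_density(x1, x2, x3, r12, r13, r23):
    """f = f1 f2 f3 * c13 * c23 * c12|3, with variable 3 as the shared node."""
    r12_3 = (r12 - r13 * r23) / math.sqrt((1 - r13**2) * (1 - r23**2))  # partial corr.
    marginals = N01.pdf(x1) * N01.pdf(x2) * N01.pdf(x3)
    c13 = gauss_cop(N01.cdf(x1), N01.cdf(x3), r13)
    c23 = gauss_cop(N01.cdf(x2), N01.cdf(x3), r23)
    c12_3 = gauss_cop(cond_cdf(x1, x3, r13), cond_cdf(x2, x3, r23), r12_3)
    return marginals * c13 * c23 * c12_3


def trivariate_normal_pdf(x1, x2, x3, a, b, c):
    """Direct density; a, b, c = corr(1,2), corr(1,3), corr(2,3)."""
    det = 1 + 2 * a * b * c - a**2 - b**2 - c**2
    # Quadratic form x' R^{-1} x written out with the cofactors of R.
    q = ((1 - c**2) * x1**2 + (1 - b**2) * x2**2 + (1 - a**2) * x3**2
         + 2 * (b * c - a) * x1 * x2 + 2 * (a * c - b) * x1 * x3
         + 2 * (a * b - c) * x2 * x3) / det
    return math.exp(-0.5 * q) / ((2 * math.pi) ** 1.5 * math.sqrt(det))


point = (0.4, -0.7, 1.2)
corrs = (0.5, 0.3, -0.2)  # hypothetical correlations r12, r13, r23
print(vine_density(*point, *corrs), trivariate_normal_pdf(*point, *corrs))
```

The Gaussian case is convenient for a check because the conditional pair copula does not depend on the value of the conditioning variable; for other copula families this is an extra modeling assumption.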

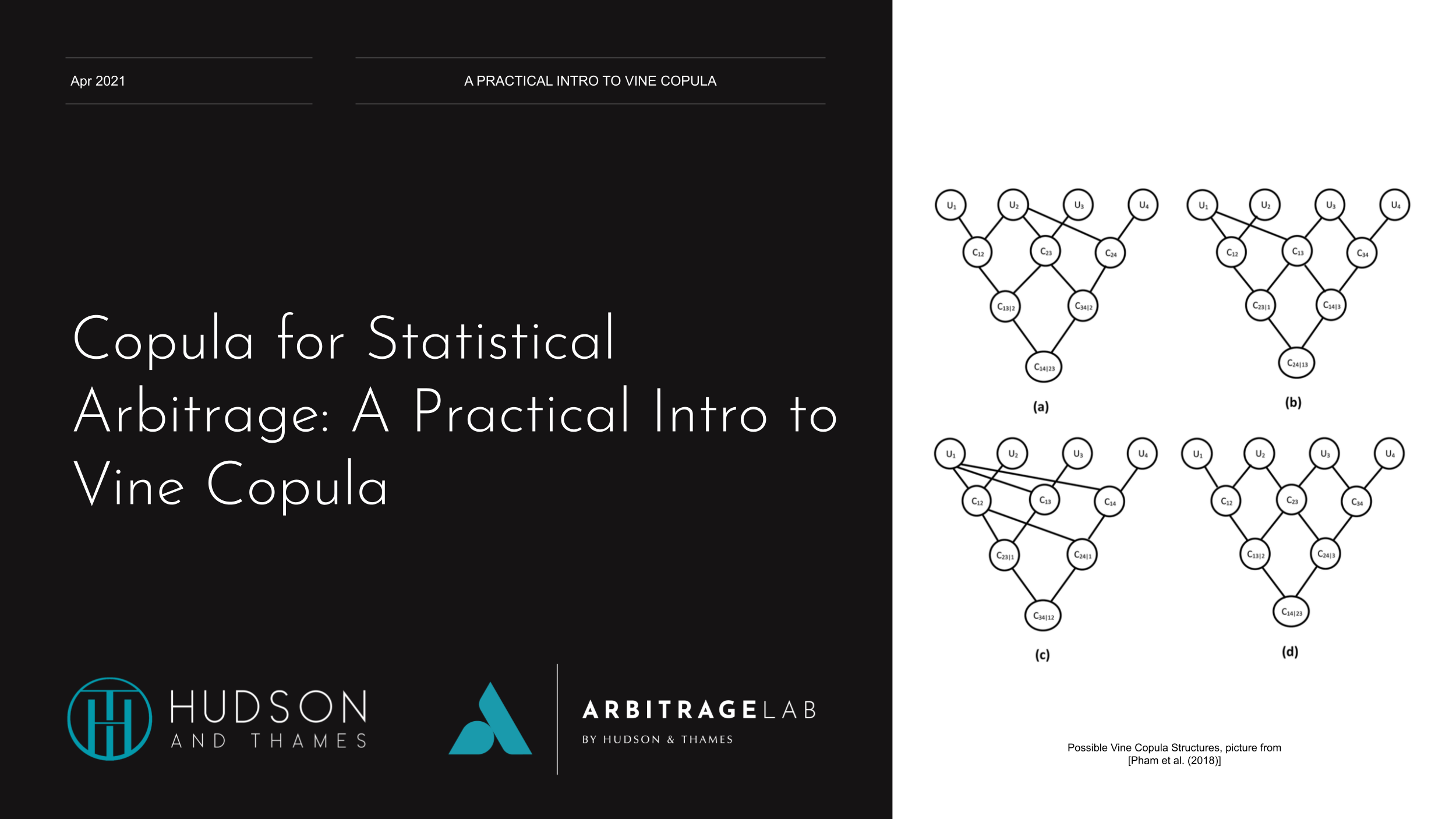

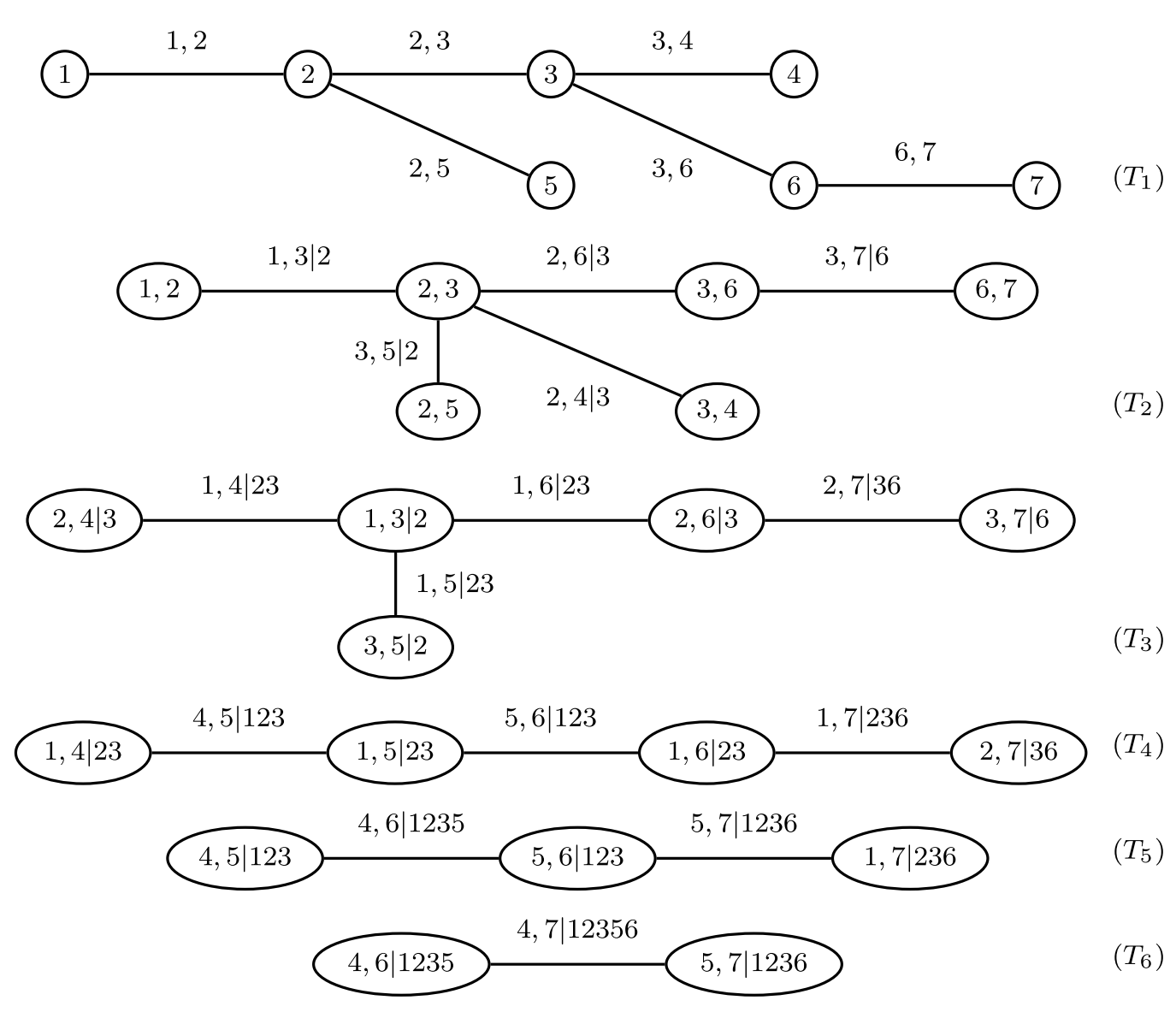

It turns out that this decomposition can be visualized nicely in a graph structure as follows, as suggested by [Bedford and Cooke (2002)]:

Fig 1: A vine copula tree structure for 3 random variables.

Here every node stands for either a joint density or a marginal density, and bivariate copulas connect the nodes. You can visualize them as layers of trees with the leaves on top. Each leaf stands for the marginal density of one random variable. This relation stays true for an arbitrary number of random variables. Here the tree has 3 layers, and the whole structure is itself a vine copula.

Formal Definitions

Vine Copula Types

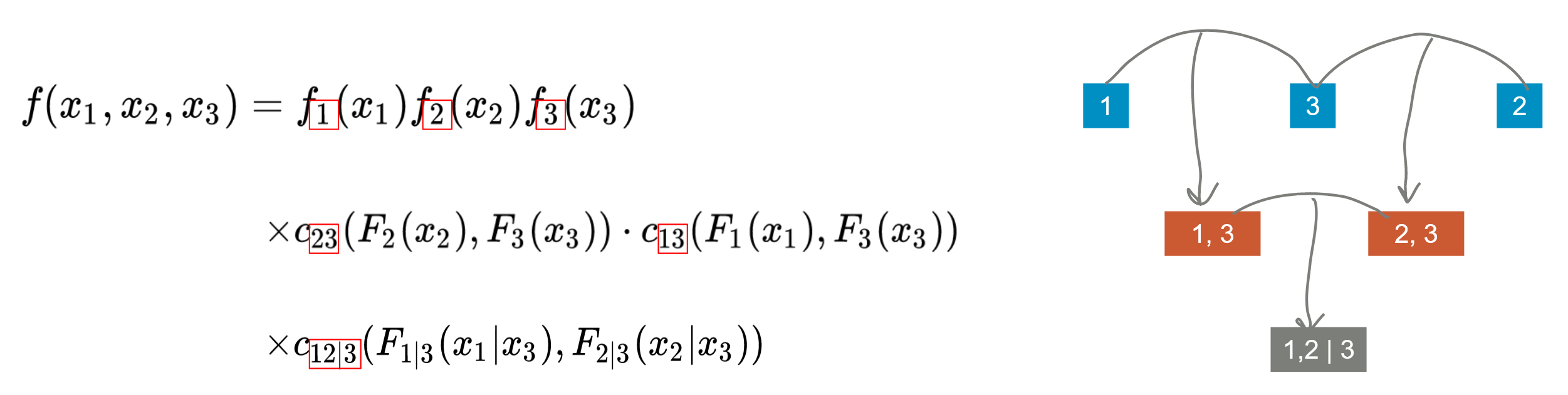

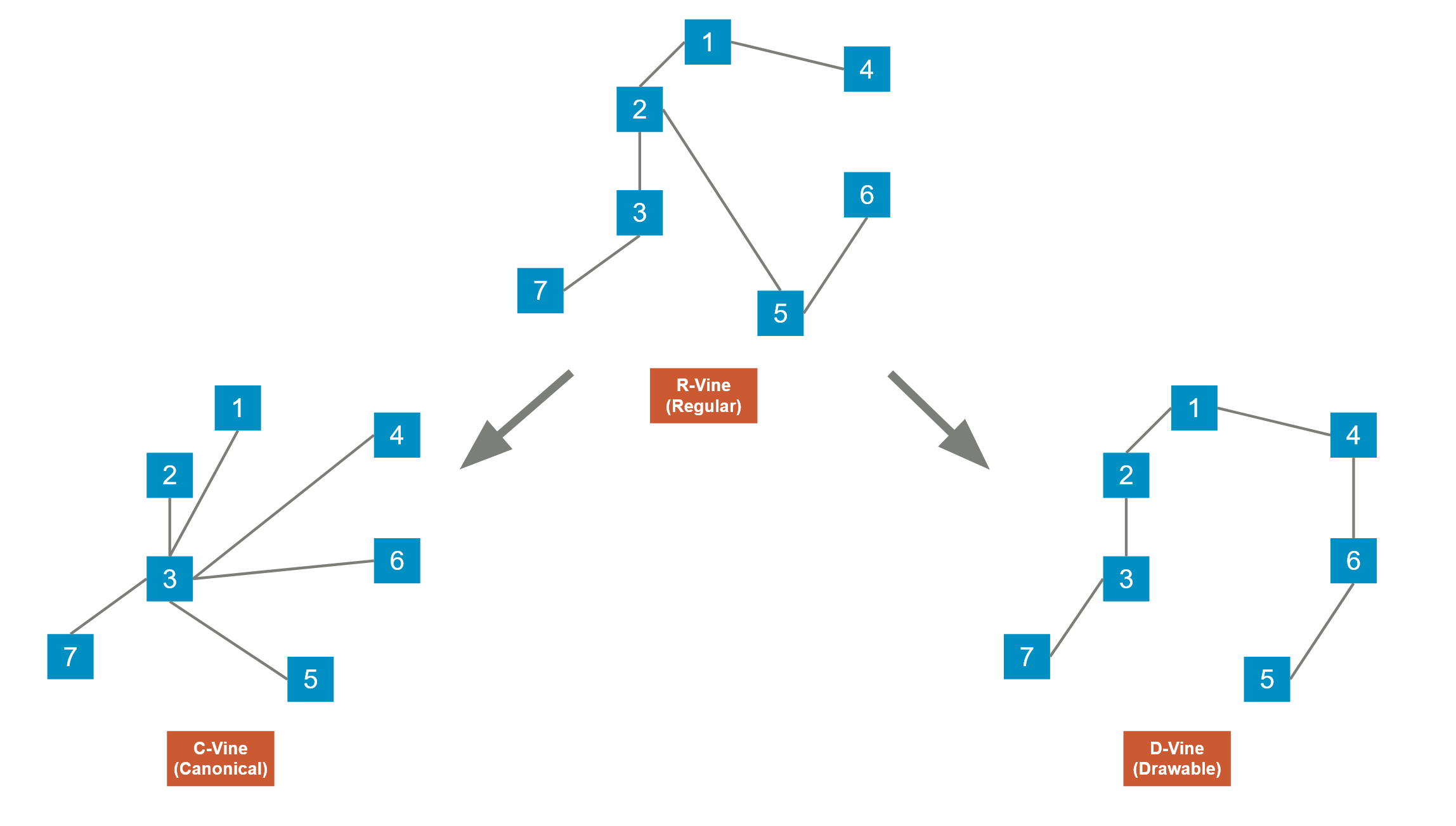

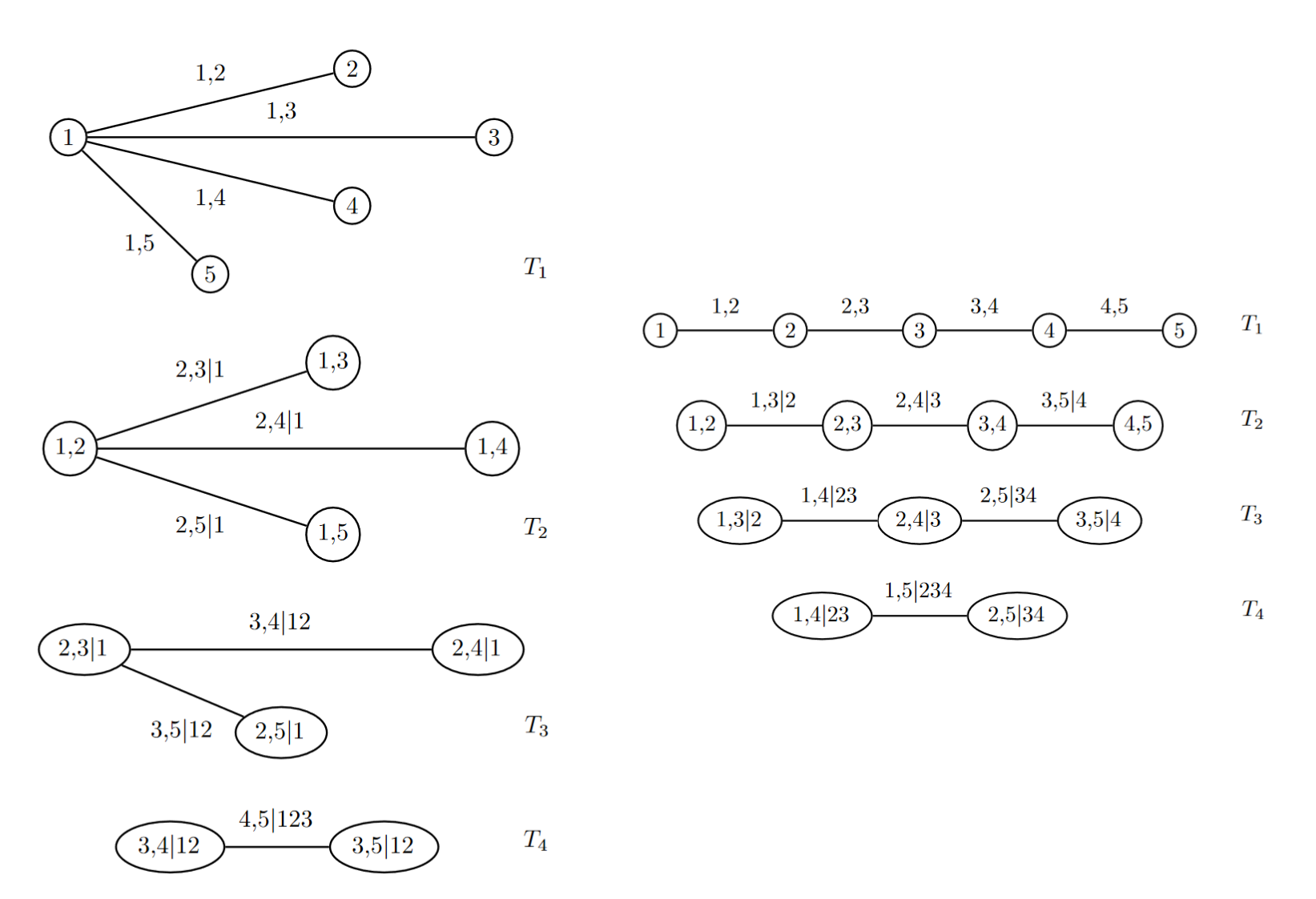

Every vine copula has a Regular Vine structure (R-vine). There are 2 special cases of R-vine called Canonical Vine (C-vine) and Drawable Vine (D-vine). Those structures hold true for every layer of the tree. They can be visualized as follows (for every layer of the tree):

Fig 2: R-vine, C-vine and D-vine.

For an R-vine, each level of the tree needs to satisfy the following conditions:

- Each layer of the tree must have $n - 1$ edges for $n$ nodes, and all the nodes in this layer must be connected (i.e., you can travel from any node to any other node via edges in finite steps).
- Proximity condition: Every edge contributes to a joint density in the next layer, and two nodes in the next layer can be connected only if the edges they come from share a common node. For example in Fig 1, at layer 1, node 1 and node 3 make a (1,3) node in layer 2.
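The two conditions above can be sketched as small checks. The helper names below are hypothetical, and nodes of the next layer are represented as `frozenset`s of the two nodes joined by the corresponding edge, which is one possible encoding rather than a standard one.

```python
def is_tree(nodes, edges):
    """True if `edges` connect all `nodes` using exactly len(nodes) - 1 edges."""
    nodes = list(nodes)
    if len(edges) != len(nodes) - 1:
        return False
    neighbours = {node: set() for node in nodes}
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    seen, stack = {nodes[0]}, [nodes[0]]
    while stack:  # depth-first search from an arbitrary node
        for nxt in neighbours[stack.pop()] - seen:
            seen.add(nxt)
            stack.append(nxt)
    return len(seen) == len(nodes)


def satisfies_proximity(next_layer_edges):
    """Two next-layer nodes may be joined only if they share exactly one node."""
    return all(len(a & b) == 1 for a, b in next_layer_edges)


# Three variables where layer 1 joins 1-3 and 2-3.
layer1_nodes = [1, 2, 3]
layer1_edges = [(1, 3), (2, 3)]

# Every edge of layer 1 becomes a node of layer 2.
layer2_nodes = [frozenset(edge) for edge in layer1_edges]
layer2_edges = [(layer2_nodes[0], layer2_nodes[1])]

print(is_tree(layer1_nodes, layer1_edges))   # layer 1 is a connected tree
print(is_tree(layer2_nodes, layer2_edges))   # so is layer 2
print(satisfies_proximity(layer2_edges))     # (1,3) and (2,3) share node 3
```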

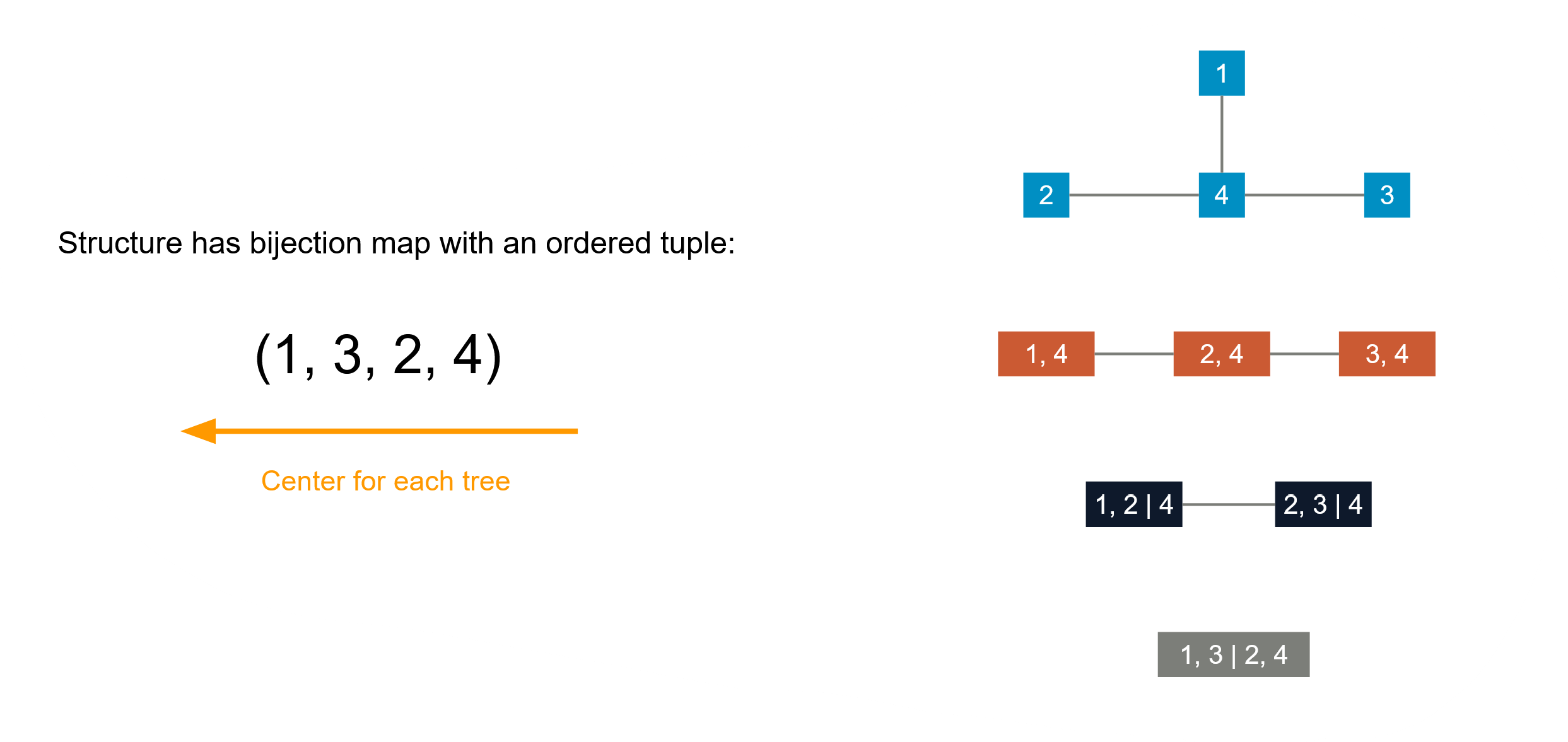

For a C-vine, it must first be an R-vine, and in addition every layer of the tree has a center component connected to all the other nodes in that layer. This structure makes it possible to represent a C-vine using an ordered tuple: reading backwards, each number represents the center component at each level of the tree.

For a D-vine, each tree is a path: there is one road that goes through all the nodes. It can also be represented as an ordered tuple. For example $(1, 2, 3, 4)$ means the 1st layer of the tree is 1-2-3-4, and following the D-vine structure convention, the next layers of the tree, and thus the whole D-vine, are determined.
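As a sketch of how an ordered tuple pins down a whole D-vine, the hypothetical helper below lists the pair-copula terms layer by layer: two variables that sit `gap` positions apart in the tuple are paired, conditioned on everything between them.

```python
def dvine_nodes(order):
    """List the pair-copula terms of a D-vine, layer by layer.

    Each term is (left variable, right variable, conditioning variables).
    """
    n = len(order)
    layers = []
    for gap in range(1, n):
        layer = [
            (order[i], order[i + gap], tuple(order[i + 1 : i + gap]))
            for i in range(n - gap)
        ]
        layers.append(layer)
    return layers


for depth, layer in enumerate(dvine_nodes((1, 2, 3, 4)), start=1):
    print(depth, layer)
# 1 [(1, 2, ()), (2, 3, ()), (3, 4, ())]
# 2 [(1, 3, (2,)), (2, 4, (3,))]
# 3 [(1, 4, (2, 3))]
```

Reversing the tuple gives the same pairs with left and right swapped, so it describes the same D-vine.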

Fig 3: C-vine and its ordered tuple representation. In some literature, for example in [Czado et al. (2012)] it is written reversely as (4, 2, 3, 1)

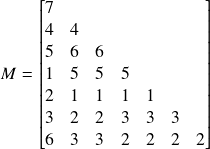

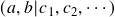

The representation of a generic R-vine is slightly more complicated, and requires a lower triangular matrix (some sources use an upper triangular matrix instead; the two representations are equivalent). Let’s look at the following R-vine with 7 random variables as an example:

Fig 4: An R-vine tree. Picture from Dissmann, J., Brechmann, E.C., Czado, C. and Kurowicka, D., 2013. Selecting and estimating regular vine copulae and application to financial returns. Computational Statistics & Data Analysis, 59, pp.52-69.

The R-vine tree in Fig 4 can be represented by the following matrix:

The R-vine structure is mapped to the matrix as follows:

- Find a diagonal term $m_{k,k}$.
- Follow that column downwards and stop somewhere, say at $m_{i,k}$ with $i > k$.
- The leftover terms below $m_{i,k}$ in that column are then the conditions $\{m_{i+1,k}, \dots, m_{n,k}\}$.
- The node $(m_{k,k}, m_{i,k} \mid m_{i+1,k}, \dots, m_{n,k})$ is in the R-vine. The number of conditions determines which layer of the tree it sits at.
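The reading rule can be sketched in a few lines: take a diagonal entry, stop at an entry further down the same column, and treat everything below the stopping point as the conditioning set. The matrix used here is a hypothetical 4-variable example built for this illustration (it encodes the D-vine 1-2-3-4); it is not the 7-variable matrix of Fig 4, and the function name is made up.

```python
def rvine_matrix_nodes(matrix):
    """Read every (pair | conditions) term off a lower triangular R-vine matrix."""
    n = len(matrix)
    nodes = []
    for k in range(n - 1):             # every column except the last
        head = matrix[k][k]            # diagonal term
        for i in range(k + 1, n):      # stop at each entry below the diagonal
            partner = matrix[i][k]
            conditions = frozenset(matrix[r][k] for r in range(i + 1, n))
            nodes.append((frozenset((head, partner)), conditions))
    return nodes


# Hypothetical lower triangular matrix; zeros mark the unused upper part.
example = [
    [1, 0, 0, 0],
    [4, 2, 0, 0],
    [3, 4, 3, 0],
    [2, 3, 4, 4],
]

for pair, conditions in rvine_matrix_nodes(example):
    print(sorted(pair), "|", sorted(conditions))
```

For this matrix the first column yields (1,2), (1,3 | 2) and (1,4 | 2,3), the second yields (2,3) and (2,4 | 3), and the third yields (3,4).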

Working from the top of the tree you can also map the matrix back to a full R-vine. (Left as an exercise to the reader.) Sometimes in literature you will see the same structure represented by the triangular matrix below:

Vine Copula Type Features

For $n$ variables, it can be shown that there are $\frac{n!}{2} \cdot 2^{\binom{n-2}{2}}$ ways to construct an R-vine, which is a very large number for large $n$. For application purposes, due to the sheer number of possible R-vine structures, it makes sense to impose further structure for model interpretability and to avoid overfitting.
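To get a feel for how quickly the number of structures grows, the sketch below evaluates the standard count of R-vines on $n$ variables, $\frac{n!}{2} \cdot 2^{\binom{n-2}{2}}$, for a few values of $n$. The function name is hypothetical, and the formula is assumed to be the count referred to above.

```python
from math import comb, factorial


def count_rvines(n: int) -> int:
    """Number of distinct R-vine structures on n variables (n >= 3)."""
    if n < 3:
        raise ValueError("the formula is used here for n >= 3 only")
    return factorial(n) // 2 * 2 ** comb(n - 2, 2)


for n in range(3, 9):
    print(n, count_rvines(n))
```

With 4 variables there are 24 structures, and with 7 variables there are already more than 2.5 million.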

Fig 5: C-vine and D-vine tree. Picture from Brechmann, E. and Schepsmeier, U., 2013. Cdvine: Modeling dependence with c-and d-vine copulas in r. Journal of statistical software, 52(3), pp.1-27.

C-vine and D-vine are the most commonly used ones in practice for dependence modeling. C-vine, due to its star-like structure, is useful when we have a key variable that largely governs the variable interactions in the data. This is the case when we trade, say, 3 stocks against 1 key stock, as described in [Stübinger et al., 2018]. D-vine may be more beneficial when we do not wish to have a key node that controls the dependencies. Note that here we are choosing a model based on our prior belief about the data.

Also, derived from their idiosyncratic structures, C-vine reflects the belief that variables can be ordered by importance, measured by strength of dependency. This is easiest to understand through an example. For the C-vine described in Fig. 5, represented by the ordered tuple $(5, 4, 3, 2, 1)$, variable 1 is the center at level 1 of the tree and subsequently appears in all other levels; it is therefore the most “important” and, loosely speaking, everything else depends on it. Variable 2 starts to become important from level 2, and so on. The order of (un)-importance is thus represented by $(5, 4, 3, 2, 1)$.
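The importance ordering of a C-vine can be made explicit with a small sketch. It assumes the tuple convention stated earlier (reading the tuple backwards gives the center of each layer) and uses the tuple `(5, 4, 3, 2, 1)` purely as an illustration. The helper name is hypothetical.

```python
def cvine_nodes(order):
    """List the pair-copula terms of a C-vine, layer by layer.

    Reading `order` backwards gives the center of each layer. Each term is
    (center, other variable, conditioning variables).
    """
    centers = list(reversed(order))  # centers[0] is the center of layer 1
    layers = []
    for depth in range(len(order) - 1):
        center = centers[depth]
        given = tuple(centers[:depth])  # all earlier centers are conditioned on
        layers.append([(center, other, given) for other in centers[depth + 1 :]])
    return layers


for depth, layer in enumerate(cvine_nodes((5, 4, 3, 2, 1)), start=1):
    print(depth, layer)
```

Variable 1 appears in every term, either as the center of layer 1 or in the conditioning set of the deeper layers, which is what makes it the most "important" variable of the structure.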

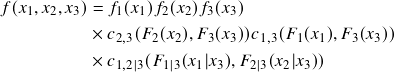

D-vine is in general considered more flexible than C-vine because there is no need to specify a centered variable. This might be beneficial if the random variables we try to model share an underlying symmetrical structure. In Fig. 5, the D-vine can be represented by an ordered tuple ![]() (Note

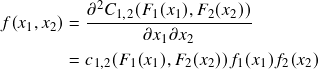

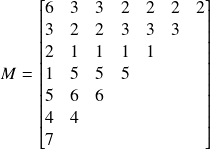

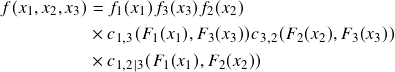

(Note ![]() also works). It has a strong sequential structure, due to the fact that to model two variables, it needs to condition on all the variables in between. For instance, in the example pictured in Fig. 5, to calculate bivariate copula density between variable 1 and variable 4, it needs to condition on variable 2 and 3. Another common assumption (though not needed, strictly speaking) used when constructing D-vine is that the copula density does not condition on specific values of the conditioning variables. For example, in Fig. 1 we can write the D-vine density decomposition as below

also works). It has a strong sequential structure, due to the fact that to model two variables, it needs to condition on all the variables in between. For instance, in the example pictured in Fig. 5, to calculate bivariate copula density between variable 1 and variable 4, it needs to condition on variable 2 and 3. Another common assumption (though not needed, strictly speaking) used when constructing D-vine is that the copula density does not condition on specific values of the conditioning variables. For example, in Fig. 1 we can write the D-vine density decomposition as below

(6)

That is, when we estimate the bivariate copula ![]() , we treat it as

, we treat it as ![]() . This is done to make the model tractable. Note that this is a big deal, since it greatly simplifies our workload because

. This is done to make the model tractable. Note that this is a big deal, since it greatly simplifies our workload because ![]() , once fit, is only a function of 2 variables instead of 3. Note that we are talking about features specific to C-vine and D-vine models in general, and those logically may have nothing to do with our original data, though we hope that the original data resemble those features as well.

, once fit, is only a function of 2 variables instead of 3. Note that we are talking about features specific to C-vine and D-vine models in general, and those logically may have nothing to do with our original data, though we hope that the original data resemble those features as well.

Calculating Important Quantities

For ease of demonstration, we assume the number of random variables $n = 4$.

Note that no matter what specific R-vine structure we assume, we can always calculate the probability density $f(x_1, x_2, x_3, x_4)$. We can therefore derive the conditional (cumulative) density and the overall cumulative density from the probability density by integration.

Probability Density

The probability density $f(x_1, x_2, x_3, x_4)$ can be directly derived from the vine copula structure by walking through the tree, since all the bivariate copulas and the marginal densities are known. This quantity is the building block for other important quantities.

Also, the density is often calculated with respect to the variables’ quantiles (pseudo-observations), and it is denoted as

$$c(u_1, u_2, u_3, u_4), \quad u_i = F_i(x_i)$$

This is okay since when we fit vine copulas in practice we use pseudo-observations, and therefore the marginals are all uniform in $[0, 1]$ anyway.

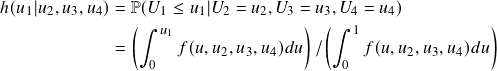

Conditional Probability

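This quantity needs to be calculated numerically by integrating over the target variable:

$$h(u_1 \mid u_2, u_3, u_4) = P(U_1 \le u_1 \mid U_2 = u_2, U_3 = u_3, U_4 = u_4) = \frac{\int_0^{u_1} c(u, u_2, u_3, u_4) \, du}{\int_0^{1} c(u, u_2, u_3, u_4) \, du} \tag{7}$$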

This quantity is especially useful for trading, since it indicates whether a stock is overpriced or underpriced historically, compared to other stocks in this cohort. In “traditional” copula models, this quantity is computed from top down, taking partial differentiation from cumulative densities. Here it is computed from bottom up, taking numerical integration from the probability density.
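The bottom-up computation can be sketched as a ratio of two one-dimensional integrals of the copula density over the target variable. The function below is a hypothetical helper that uses a plain midpoint rule and works for any number of conditioning variables. To have a closed form to compare against, the demo uses a 2-dimensional Gaussian copula instead of a fitted 4-dimensional vine, which is an assumption of this example only.

```python
import math
from statistics import NormalDist

N01 = NormalDist()


def conditional_cdf(density, u_target, u_others, steps=2000):
    """Approximate P(U_1 <= u_target | other quantiles) from a copula density.

    `density(u, *u_others)` is integrated over its first argument with the
    midpoint rule, then normalized by the integral over the whole of [0, 1].
    """
    def integral(upper):
        width = upper / steps
        return width * sum(density((j + 0.5) * width, *u_others) for j in range(steps))

    return integral(u_target) / integral(1.0)


def gauss_cop_density(u, v, rho):
    """Bivariate Gaussian copula density, used here only as a test case."""
    a, b = N01.inv_cdf(u), N01.inv_cdf(v)
    expo = -(rho**2 * (a**2 + b**2) - 2.0 * rho * a * b) / (2.0 * (1.0 - rho**2))
    return math.exp(expo) / math.sqrt(1.0 - rho**2)


rho, u1, u2 = 0.5, 0.35, 0.7
numeric = conditional_cdf(lambda u, v: gauss_cop_density(u, v, rho), u1, (u2,))
closed_form = N01.cdf(
    (N01.inv_cdf(u1) - rho * N01.inv_cdf(u2)) / math.sqrt(1.0 - rho**2)
)
print(numeric, closed_form)  # the two values should be close
```

In a trading context, a conditional probability far from 0.5 would suggest that the target stock is priced unusually relative to the others, which is how the quantity is described above.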

Cumulative Density

When dealing with “traditional” copula models, the cumulative density is the definition of the copula itself, and is thus usually the easiest quantity to compute. In vine copula models it is the hardest one in contrast, because a vine copula starts from probability densities, not from cumulative densities. Hence this quantity needs to be numerically integrated across the hypercube $[0, u_1] \times [0, u_2] \times [0, u_3] \times [0, u_4]$. Often people use Monte-Carlo integration for this computation. This quantity is not used as often in trading applications.
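A minimal sketch of the Monte-Carlo integration: draw points uniformly inside the box from the origin to the target quantiles, average the copula density over them, and scale by the volume of the box. The helper is hypothetical and works in any dimension. The demo uses a 2-dimensional Farlie-Gumbel-Morgenstern (FGM) copula only because its cumulative form is known in closed form, so the estimate can be checked.

```python
import random


def mc_cumulative(density, upper, n_samples=100_000, seed=0):
    """Monte-Carlo estimate of the integral of `density` over [0, upper_1] x ..."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    volume = 1.0
    for u in upper:
        volume *= u
    total = 0.0
    for _ in range(n_samples):
        point = [rng.uniform(0.0, u) for u in upper]
        total += density(*point)
    return volume * total / n_samples


def fgm_density(u, v, theta=0.5):
    """FGM copula density, valid for -1 <= theta <= 1."""
    return 1.0 + theta * (1.0 - 2.0 * u) * (1.0 - 2.0 * v)


def fgm_cdf(u, v, theta=0.5):
    """Closed-form FGM copula, used to check the estimate."""
    return u * v * (1.0 + theta * (1.0 - u) * (1.0 - v))


estimate = mc_cumulative(fgm_density, (0.6, 0.3))
exact = fgm_cdf(0.6, 0.3)
print(estimate, exact)  # the estimate should be close to the closed form
```

The error of such an estimate shrinks roughly with the square root of the number of samples, regardless of dimension, which is one reason the method is popular for higher dimensional copulas.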

Sample and Fitting

Sampling and fitting are very interesting topics but are beyond our scope here due to their complexity. Interested readers can refer to [Dissmann et al., 2013] for more details. This is an active research field and involves a lot of heavy lifting. Fundamentally, an algorithm needs to find a suitable vine structure, and also determine the copula type and parameters at each node of the tree. This is thus a high dimensional model fitting problem. Luckily, a lot of great algorithms are already nicely black-boxed and handled automatically by software. Specifically, sample generation is pretty much a solved problem, whereas determining the best R-vine structure for given data, along with all copula types and parameters, is still under discussion, although some algorithms are available. Thus, sampling is very fast, and fitting can be slow. To sum it up qualitatively:

- When working with copula models, whether it is a “traditional” copula model or vine copula, we always fit the pseudo-observations, i.e., quantiles data, instead of the real data.

- For fitting a vine copula, we need to determine the best tree structure that reflects the data, along with specifying the type of bivariate copulas and their parameters. The amount of calculation is huge.
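The first point above, fitting on pseudo-observations rather than raw data, can be sketched with a rank transform. The convention used here, rank divided by sample size plus one, is a common choice but an assumption of this example. The function name and the return series are made up for illustration.

```python
def pseudo_observations(values):
    """Map a sample into (0, 1) via ranks: u_i = rank_i / (n + 1).

    Ties are broken by order of appearance, a simplification of this sketch.
    """
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0] * n
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return [r / (n + 1) for r in ranks]


# Hypothetical daily returns of one stock.
returns = [0.012, -0.004, 0.031, 0.000, -0.020]
print(pseudo_observations(returns))
```

Dividing by `n + 1` instead of `n` keeps every value strictly inside the unit interval, which avoids infinite values when a copula density is evaluated at the boundary.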

Given data and a specific vine copula, we can always evaluate the fit by calculating the log-likelihood, and consequently AIC and BIC, and compare those results across candidate models.
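As a sketch of this comparison, the helpers below compute the log-likelihood of pseudo-observations under a copula density and the two information criteria in their standard form. The function names are hypothetical, and the log-likelihoods, parameter counts and sample size in the usage lines are made-up numbers for illustration only.

```python
import math


def log_likelihood(density, sample):
    """Sum of log densities over a sample of pseudo-observation tuples."""
    return sum(math.log(density(*point)) for point in sample)


def aic(log_lik, n_params):
    """Akaike information criterion: lower is better."""
    return 2 * n_params - 2 * log_lik


def bic(log_lik, n_params, n_obs):
    """Bayesian information criterion: lower is better."""
    return n_params * math.log(n_obs) - 2 * log_lik


# Made-up fits: model B has a higher likelihood but twice as many parameters.
fit_a = {"log_lik": 152.3, "n_params": 6}
fit_b = {"log_lik": 155.1, "n_params": 12}
n_obs = 500

for name, fit in (("A", fit_a), ("B", fit_b)):
    print(name, aic(fit["log_lik"], fit["n_params"]),
          bic(fit["log_lik"], fit["n_params"], n_obs))
```

Both criteria penalize the number of parameters, so a vine with many flexible pair copulas has to earn its extra complexity through a sufficiently higher likelihood.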

Comments

Vine copula grants great flexibility in dependence modeling among multiple random variables. Specifically for trading, it provides a valid approach to capture higher dimensional statistical arbitrage, which is hard for non-quant traders to come up with intuitively. It also has other applications, especially in risk management. Once one wraps one's head around the concepts, vine copula is a model with great interpretability. We will talk about its applications in trading in another article.


References

- Czado, C., Schepsmeier, U. and Min, A., 2012. Maximum likelihood estimation of mixed C-vines with application to exchange rates. Statistical Modelling, 12(3), pp.229-255.

- Dissmann, J., Brechmann, E.C., Czado, C. and Kurowicka, D., 2013. Selecting and estimating regular vine copulae and application to financial returns. Computational Statistics & Data Analysis, 59, pp.52-69.

- Hofmann, M. and Czado, C., 2010. Assessing the VaR of a portfolio using D-vine copula based multivariate GARCH models. Preprint.

- Joe, H. and Kurowicka, D. eds., 2011. Dependence modeling: vine copula handbook. World Scientific.

- Kim, D., Kim, J.M., Liao, S.M. and Jung, Y.S., 2013. Mixture of D-vine copulas for modeling dependence. Computational Statistics & Data Analysis, 64, pp.1-19.

- Kraus, D. and Czado, C., 2017. D-vine copula based quantile regression. Computational Statistics & Data Analysis, 110, pp.1-18.

- Stübinger, J., Mangold, B. and Krauss, C., 2018. Statistical arbitrage with vine copulas. Quantitative Finance, 18(11), pp.1831-1849.

- Sukcharoen, K. and Leatham, D.J., 2017. Hedging downside risk of oil refineries: A vine copula approach. Energy Economics, 66, pp.493-507.

- Yu, R., Yang, R., Zhang, C., Špoljar, M., Kuczyńska-Kippen, N. and Sang, G., 2020. A Vine Copula-Based Modeling for Identification of Multivariate Water Pollution Risk in an Interconnected River System Network. Water, 12(10), p.2741.