Mean-Reverting Spread Modeling: Caveats in Calibrating the OU Process

by Hansen Pei

Join the Reading Group and Community: Stay up to date with the latest developments in Financial Machine Learning!

This is a series where we aim to cover in detail various aspects of the classic Ornstein-Uhlenbeck (OU) model and the Ornstein-Uhlenbeck Jump (OUJ) model, with applications focusing on mean-reverting spread modeling under the context of pairs trading or statistical arbitrage. Given the universality and popularity of those models, the techniques discussed can easily be applied to other areas where the OU or OUJ model seems fit.

Introduction

In this article, we aim to dive into the classic OU model, and illustrate the most common tasks encountered in applications:

1. How to generate an OU process.

2. Caveats in fitting an OU process.

The stochastic differential equation for an OU process is as follows:

![]()

where ![]() is the mean-reverting speed,

is the mean-reverting speed, ![]() is the long-term average and where the process reverts to,

is the long-term average and where the process reverts to, ![]() is a standard Wiener/Brownian process and

is a standard Wiener/Brownian process and ![]() is the scaling for it (therefore the standard deviation for the Brownian part). Here

is the scaling for it (therefore the standard deviation for the Brownian part). Here ![]() are positive constants, though in more generalized models they could vary with time.

are positive constants, though in more generalized models they could vary with time.

The OU process is probably the simplest continuous-time mean-reverting model. Due to its simplicity, it is widely used across many fields in quantitative finance such as risk management, interest rate model, and pairs trading. It seems like a well-studied field that most problems are solved (like linear regression or linear algebra). However, lots of the common problems practitioners face are only recently treated with rigor, or not dealt with at all.

We start by presenting Doob’s exact method for generating an OU process. Then, we demonstrate that when one tries to fit an OU process, as we will see, the mean-reversion speed is in general notoriously difficult to estimate correctly.

Generating OU Process

Monte-Carlo methods are heavily used in analyzing stochastic processes and thus it is in general helpful to know how to simulate OU processes. The Monte-Carlo simulation also helps us understand the properties of the OU process itself, and they are heavily tied to the model’s parameter calibration as well, as we will see later.

Euler Scheme and Evaluating Stochastic Integral

Generally speaking, there are two ways for generating an OU process: Using an Euler advancement scheme (the Euler-Maruyama discretization) or using Doob’s exact simulation method. The former method is self-evident and quick to implement by directly looking at the OU process SDE. This is sure to introduce discretization errors (it is widely used anyway due to simplicity nevertheless).

An alternative approach is by looking at the analytical solution from the OU process:

![]()

Only the last stochastic integral term is not deterministic. This integral when evaluated discretely, for example in the Ito’s sense (evaluate at the start of each integral), introduces error. One can divide the interval ![]() finely for less discretization error, but this increases computation time.

finely for less discretization error, but this increases computation time.

Also, generally one wishes to get a series of values ![]() where

where ![]() is on a grid:

is on a grid: ![]() . The deterministic part can be pre-computed to save time, and pre-computation results in less discretization error, since the deterministic part of

. The deterministic part can be pre-computed to save time, and pre-computation results in less discretization error, since the deterministic part of ![]() can be computed exactly. For the stochastic past, if the difference

can be computed exactly. For the stochastic past, if the difference ![]() is small enough, one may simply approximate the stochastic integral (in the Ito’s sense) in one step by:

is small enough, one may simply approximate the stochastic integral (in the Ito’s sense) in one step by:

![]()

with ![]() .

.

Exact Simulation

This method is originally introduced and proved in [Doob (1942)]. For ![]() we look at the analytical solution:

we look at the analytical solution:

![]()

Reading from the above equation, we can treat ![]() as a random variable. Given

as a random variable. Given ![]() , Doob proved the convenient result that

, Doob proved the convenient result that ![]() is normally distributed with the mean being the deterministic part

is normally distributed with the mean being the deterministic part

![]()

and the variance coming from the random part

![]()

Therefore, knowing ![]() , to simulate

, to simulate ![]() exactly, we have

exactly, we have

![]()

with ![]() . Notice this scheme can be computed as fast as the simple Euler-Maruyama discretization, just swap the Brownian increment at each step

. Notice this scheme can be computed as fast as the simple Euler-Maruyama discretization, just swap the Brownian increment at each step

![]()

And we can see that the discretization error comes from approximating ![]() by

by ![]() , because it is only first-order accurate. The increment in simulation accuracy is huge. And practitioners should seriously consider the exact method.

, because it is only first-order accurate. The increment in simulation accuracy is huge. And practitioners should seriously consider the exact method.

Estimating Parameters

There are three constants to estimate: the mean-reverting speed ![]() , the long-term average

, the long-term average ![]() , and the standard deviation

, and the standard deviation ![]() . In general,

. In general, ![]() and

and ![]() can be estimated relatively accurately with a reasonably large amount of observations (more than 250).

can be estimated relatively accurately with a reasonably large amount of observations (more than 250).

However estimating ![]() accurately is notoriously difficult, even with a large number of observations (more than 10000, that is more than one month of 1-min data for stocks). For pairs-trading applications, the mean-reverting speed is highly related to the profitability of strategies: We want

accurately is notoriously difficult, even with a large number of observations (more than 10000, that is more than one month of 1-min data for stocks). For pairs-trading applications, the mean-reverting speed is highly related to the profitability of strategies: We want ![]() to be large so that there are more mean-reverting behaviors. Moreover, a lot of analyses are based on the half-life of mean reversion, which for an OU process is just

to be large so that there are more mean-reverting behaviors. Moreover, a lot of analyses are based on the half-life of mean reversion, which for an OU process is just

![]()

Methods for Parameter Estimation

AR(1) Approach

The most common approach is to treat the discrete data from an OU process as an AR(1) process and estimate the two parameters accordingly. The AR(1) process with constant time advancement ![]() is

is

![]()

Therefore one can simply conduct a linear regression to find ![]() ,

, ![]() and

and ![]() . Rewrite the above equation into the following form to compare with the OU parameters:

. Rewrite the above equation into the following form to compare with the OU parameters:

![]()

We have the estimated OU parameters as follows:

![]()

Here, ![]() is the mean squared error of the residuals from the OLS. Since we are using OLS, we can directly look at the analytical solution for the coefficient

is the mean squared error of the residuals from the OLS. Since we are using OLS, we can directly look at the analytical solution for the coefficient ![]() :

:

![]()

And we can do slightly better by using the max likelihood estimator instead [Tang and Chen (2009)]:

![]()

For the result, usually, there is not much difference compared to the direct AR(1) approach by choosing the max likelihood estimator, because the original data and its lag-1 are extremely similar, and ![]() . Therefore

. Therefore ![]() .

.

AR(1) Approach with Known Mean

When the long-term mean of the process is known, the AR(1) approach’s result can be enhanced:

![]()

The other 2 variables are the same as above. Readers familiar with simulations may already observe that this estimator comes from the exact simulation discussed above. When modeling a mean-reverting spread from a pair of prices, typically the long-term mean is 0. Therefore this assumption is not far-fetched.

The exact distribution of ![]() is studied in [Bao et al. (2015)] under various realistic conditions: known or unknown

is studied in [Bao et al. (2015)] under various realistic conditions: known or unknown ![]() , fixed or random initial value, etc. For realistic applications where samples are always finite, this estimator always has a positive bias. When applied to pairs trading, it means this method in general overestimates the mean-reverting frequency in any given period. We will discuss the bias in a later part of this article.

, fixed or random initial value, etc. For realistic applications where samples are always finite, this estimator always has a positive bias. When applied to pairs trading, it means this method in general overestimates the mean-reverting frequency in any given period. We will discuss the bias in a later part of this article.

Direct Max-Likelihood

Still, assume ![]() . Stübinger et al. (2017) utilize the following method that directly uses the maximum likelihood of

. Stübinger et al. (2017) utilize the following method that directly uses the maximum likelihood of ![]() conditioned on the (sequential) data. First, we directly look at the exact discretization of the OU process:

conditioned on the (sequential) data. First, we directly look at the exact discretization of the OU process:

![]()

Again, this uses Doob’s result, and we have ![]() distributing normally with mean

distributing normally with mean ![]() and standard deviation

and standard deviation ![]() . That is, the conditional density given

. That is, the conditional density given ![]() is a normal distribution:

is a normal distribution:

![]()

We now compute the sum of log-likelihood (in the sequential sense) given our sample data:

![]()

Then one can use a suitable optimization algorithm to maximize ![]() , for example by gradient descent or L-BFGS-B. This approach is in general quite slower than the AR(1)-based methods discussed above, and under a pure OU process, it produces identical results to the AR(1) estimator with a known mean.

, for example by gradient descent or L-BFGS-B. This approach is in general quite slower than the AR(1)-based methods discussed above, and under a pure OU process, it produces identical results to the AR(1) estimator with a known mean.

Moment Estimation Approach

This is adapted from a method proposed by Holý and Tomanová (2018): Take a look at the Doob’s exact discretization of the continuous OU process

![]()

From Doob’s proof for the exact discretization, and also assume ![]() , it turned out that

, it turned out that ![]() is normally distributed with the following moments (all are unconditioned, because of our convenient choice of

is normally distributed with the following moments (all are unconditioned, because of our convenient choice of ![]() .):

.):

(1) ![Rendered by QuickLaTeX.com \begin{align*} \mathbb{E}[X_t] &= \mu \\ \text{Var}[X_t] &= \frac{\sigma^2}{2\theta} \\ \text{Covar}[X_t, X_s] &= \frac{\sigma^2}{2\theta} e^{-\theta|t-s|}, \quad t \neq s. \end{align*}](https://hudsonthames.org/wp-content/ql-cache/quicklatex.com-7d3f96b9531c44a0cd24fc0a91884c64_l3.png)

Assume we know the true mean ![]() , then we can calculate the estimator:

, then we can calculate the estimator:

![]()

where we need to use unbiased estimators for the variance and covariance. After some algebra work we arrive at (note that we have ![]() total instances):

total instances):

![]()

You will be surprised at how much difference this adjustment makes for not-so-large ![]() . Since the max-likelihood estimator always has a positive bias, this adjustment makes the bias less pronounced by effectively subtracting a positive term

. Since the max-likelihood estimator always has a positive bias, this adjustment makes the bias less pronounced by effectively subtracting a positive term ![]() from it. Also

from it. Also ![]() and

and ![]() are in general at the same order of magnitude, making this term not negligible.

are in general at the same order of magnitude, making this term not negligible.

At this stage, the reader might ask whether we are splitting hairs, or are just playing with the regression coefficients from the OLS. Indeed, if we have infinite samples, all of the above four methods (practically only three are different) coincide as one should expect, and they do not have any bias. However, for finite samples, they result in very different bias profiles, especially when ![]() is small.

is small.

Can We Do Better?

Well, if we know the data do come from a pure OU process (no added noise, no jumps, etc.), it is quite challenging to form an estimator for ![]() that can outperform those estimators. They all directly look at the distributions at each time step of the OU process by taking full advantage of its normal distribution and extracts the coefficients from there.

that can outperform those estimators. They all directly look at the distributions at each time step of the OU process by taking full advantage of its normal distribution and extracts the coefficients from there.

For improvements, one needs to look beyond the AR(1) and the moment method. To the best of our knowledge, the indirect inference estimation is unbiased for finite samples. However, this method is simulation-based and is relatively slow. Interested readers can refer to [Phillips and Yu, (2008)] for details.

Asymptotic Distributions of Theta Estimator

The estimator ![]() from the AR(1) method with known mean is the most thoroughly studied one in mathematical statistics. It coincides with the direct max-likelihood estimator. Since

from the AR(1) method with known mean is the most thoroughly studied one in mathematical statistics. It coincides with the direct max-likelihood estimator. Since ![]() can be quite inaccurate (in the sense of bias and variance), one may want to take a step back and look at the full distribution of

can be quite inaccurate (in the sense of bias and variance), one may want to take a step back and look at the full distribution of ![]() to quantify the uncertainty. [Bao et al. (2015)] found the exact distributions of this estimator. However, the details are rather technical and we will skip those for this introductory post. One without access to the analytical solution can directly use Monte-Carlo to generate the distribution with reasonable speed. Let’s look at some of the asymptotic distributions listed in [Zhou and Yu (2015)] [Bao et al. (2015)] for different scenarios. Assume the true

to quantify the uncertainty. [Bao et al. (2015)] found the exact distributions of this estimator. However, the details are rather technical and we will skip those for this introductory post. One without access to the analytical solution can directly use Monte-Carlo to generate the distribution with reasonable speed. Let’s look at some of the asymptotic distributions listed in [Zhou and Yu (2015)] [Bao et al. (2015)] for different scenarios. Assume the true ![]() , and we have the discrete data of an OU process in time

, and we have the discrete data of an OU process in time ![]() with constant increment

with constant increment ![]() . The last case for fixed

. The last case for fixed ![]() has relatively complicated expressions, and interested readers can refer to the original works.

has relatively complicated expressions, and interested readers can refer to the original works.

- ⠀

,

,  fixed

fixed ,

,

fixed,

fixed,

- See the original works

Unknown

Unknown

- See the original works

Thus, whether ![]() is

is ![]() or not, asymptotically for infinite

or not, asymptotically for infinite ![]() , we do get unbiased estimations, but even with the best case where

, we do get unbiased estimations, but even with the best case where ![]() ,

, ![]() , the standard deviation of

, the standard deviation of ![]() only decreases by

only decreases by ![]() . The convergence is quite slow. However, when

. The convergence is quite slow. However, when ![]() is fixed, even if we let

is fixed, even if we let ![]() , we still have a bias in the estimator. This makes sense, because, say we have only 2 days of data from a spread, and the spread has its half-life of mean reversion being a month, the information we get within these 2 days is very limited, no matter how much high-frequency data we have access to for this pair of instruments.

, we still have a bias in the estimator. This makes sense, because, say we have only 2 days of data from a spread, and the spread has its half-life of mean reversion being a month, the information we get within these 2 days is very limited, no matter how much high-frequency data we have access to for this pair of instruments.

Estimating the Bias of Theta Estimate

Constructing the exact analytical solution from [Bao et al. (2015)] can be a quite involving exercise even given the manual, as it requires an understanding of joint characteristic functions and details in the numerical integration of complex functions. Yu (2009) investigated a quick way to approximate the bias with second order-accuracy:

![]()

where ![]() . Unfortunately to the best of our effort, we are unable to fully replicate this result at low values of

. Unfortunately to the best of our effort, we are unable to fully replicate this result at low values of ![]() , since the result is extremely sensitive to the underlying assumptions.

, since the result is extremely sensitive to the underlying assumptions.

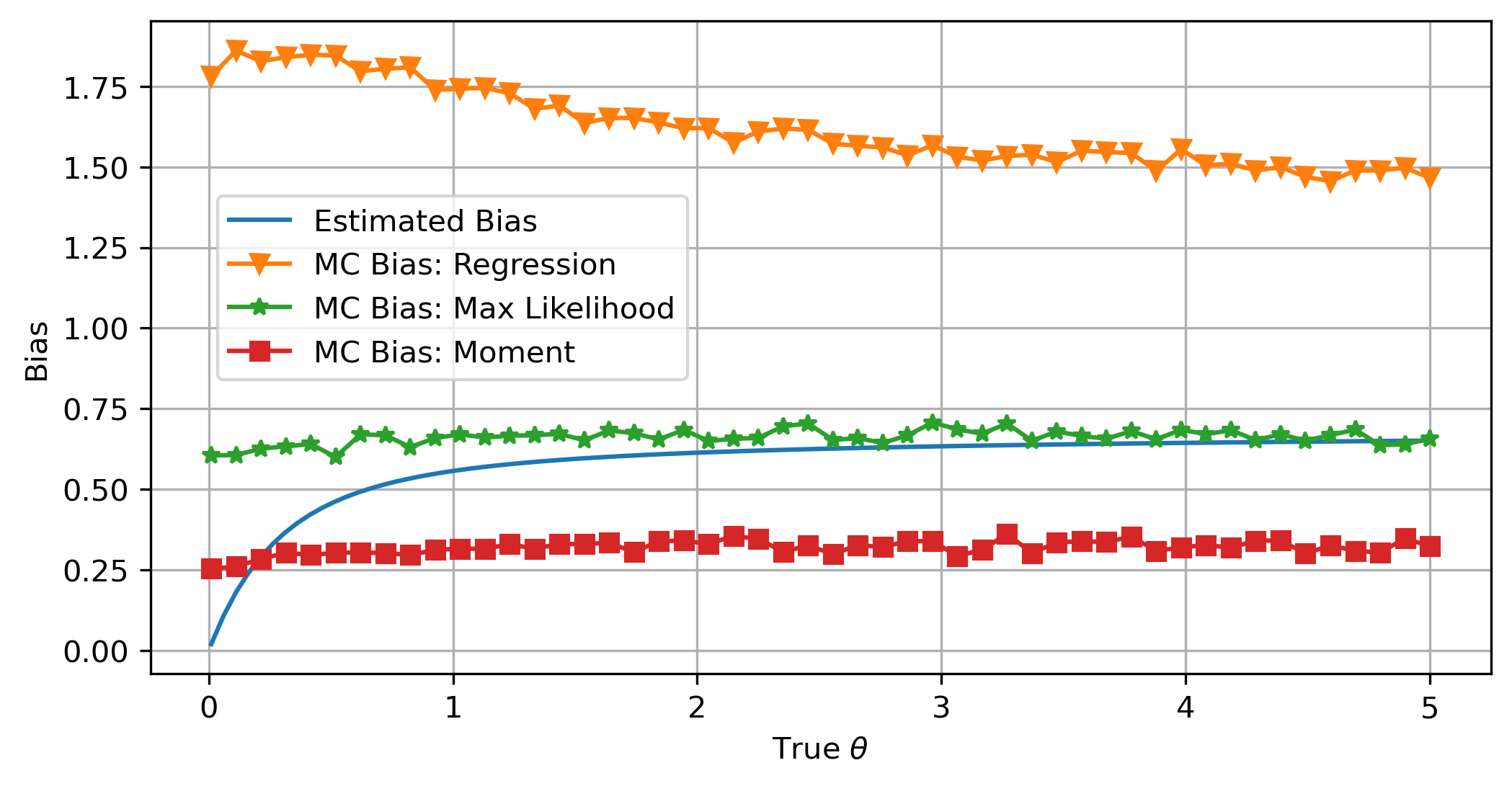

Below we compare the bias generated from the three different estimation methods of ![]() . Each plotted point is an average of 10000 Monte-Carlo results. We take

. Each plotted point is an average of 10000 Monte-Carlo results. We take ![]() ,

, ![]() , and

, and ![]() to emulate having access to 3 years of daily data. As we can see, the more information we have, the less the bias: In the regression method, when we do not know the mean, it has the largest bias. When we know the mean is

to emulate having access to 3 years of daily data. As we can see, the more information we have, the less the bias: In the regression method, when we do not know the mean, it has the largest bias. When we know the mean is ![]() , the bias drops significantly. When we calculate the moments exactly, it has the lowest amount of bias.

, the bias drops significantly. When we calculate the moments exactly, it has the lowest amount of bias.

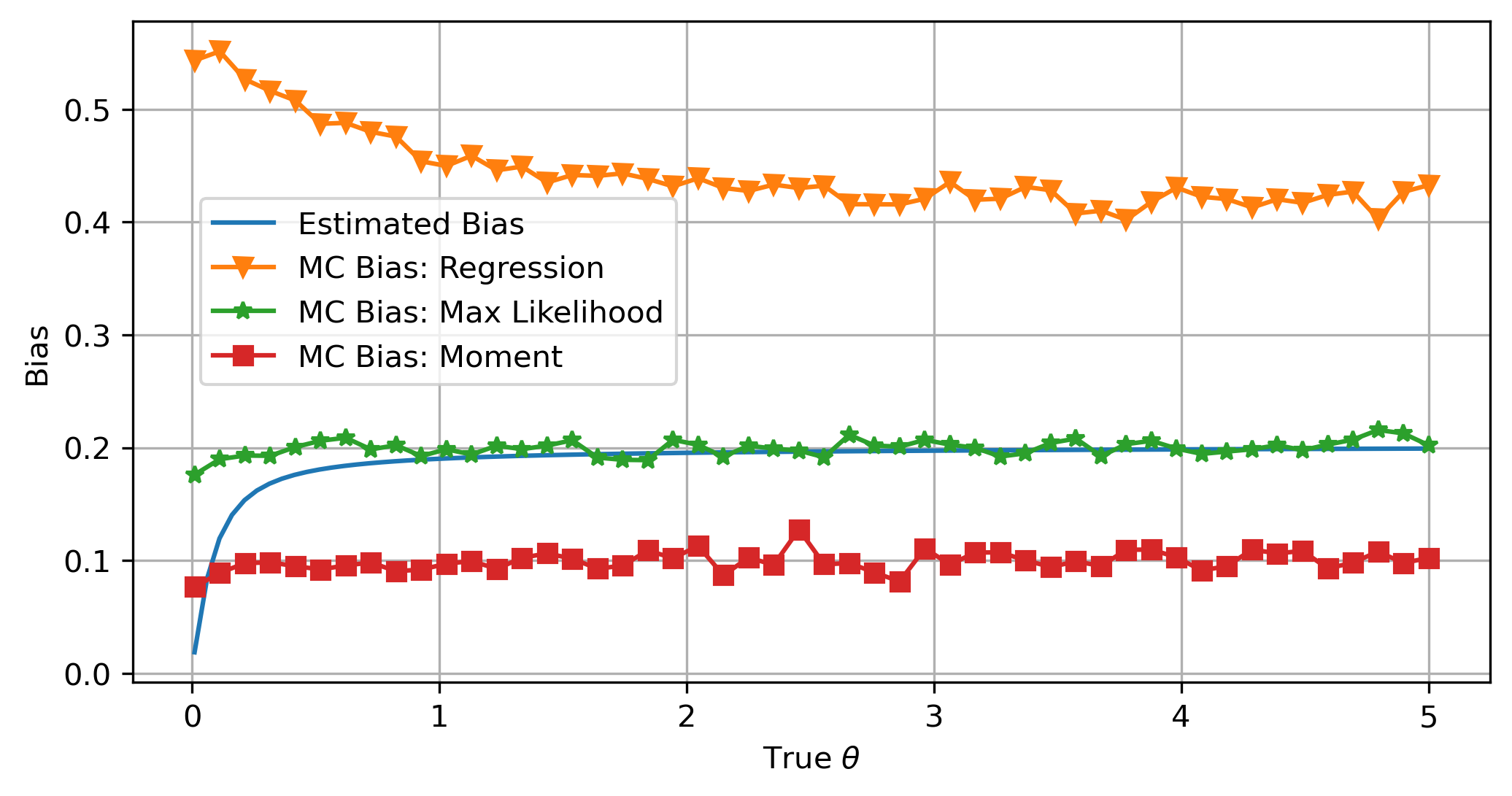

Now we assume ![]() ,

, ![]() , and

, and ![]() to emulate having access to 10 days of 1-min data. We see that with an effectively larger time span, the overall bias decreases.

to emulate having access to 10 days of 1-min data. We see that with an effectively larger time span, the overall bias decreases.

Noises and Jumps

Financial data generally have lots of noise, and it may contain jumps. Those two can seriously influence our model calibration. Here we talk briefly go over the major effects of our calibration from those two phenomena, but the details are left for another dedicated post to discuss.

The OU process with added noise still satisfies the original OU model SDE, however, our observations are assumed to be contaminated by Gaussian noise ![]() :

:

![]()

In this case, the estimator ![]() and

and ![]() can have an infinite bias as

can have an infinite bias as ![]() . In the work of [Holý and Tomanová, (2018)], they discussed several methods that can handle the added noise.

. In the work of [Holý and Tomanová, (2018)], they discussed several methods that can handle the added noise.

When jumps are present, generally the process is modeled as an OU-Jump process:

![]()

where ![]() the jump size is normally distributed, and

the jump size is normally distributed, and ![]() is a Poisson arrival process. The jumps lead to significant fat tails in the distribution of

is a Poisson arrival process. The jumps lead to significant fat tails in the distribution of ![]() and as a result, the OU process data is no longer normally distributed. If we blindly apply the OU estimation methods, both

and as a result, the OU process data is no longer normally distributed. If we blindly apply the OU estimation methods, both ![]() and

and ![]() will severely overestimate their true value on average. Thus the jumps need to be filtered before applying the OU estimation. In the works of [Mai, (2012)], [Cartea and Figueroa, (2007)], [Cázares and Ivanovs, (2020)], several techniques for filtering the jumps are discussed in detail, and this seemingly straight-forward jump-filtering procedure is far from trivial.

will severely overestimate their true value on average. Thus the jumps need to be filtered before applying the OU estimation. In the works of [Mai, (2012)], [Cartea and Figueroa, (2007)], [Cázares and Ivanovs, (2020)], several techniques for filtering the jumps are discussed in detail, and this seemingly straight-forward jump-filtering procedure is far from trivial.

Monte-Carlo Results

Experiment 1

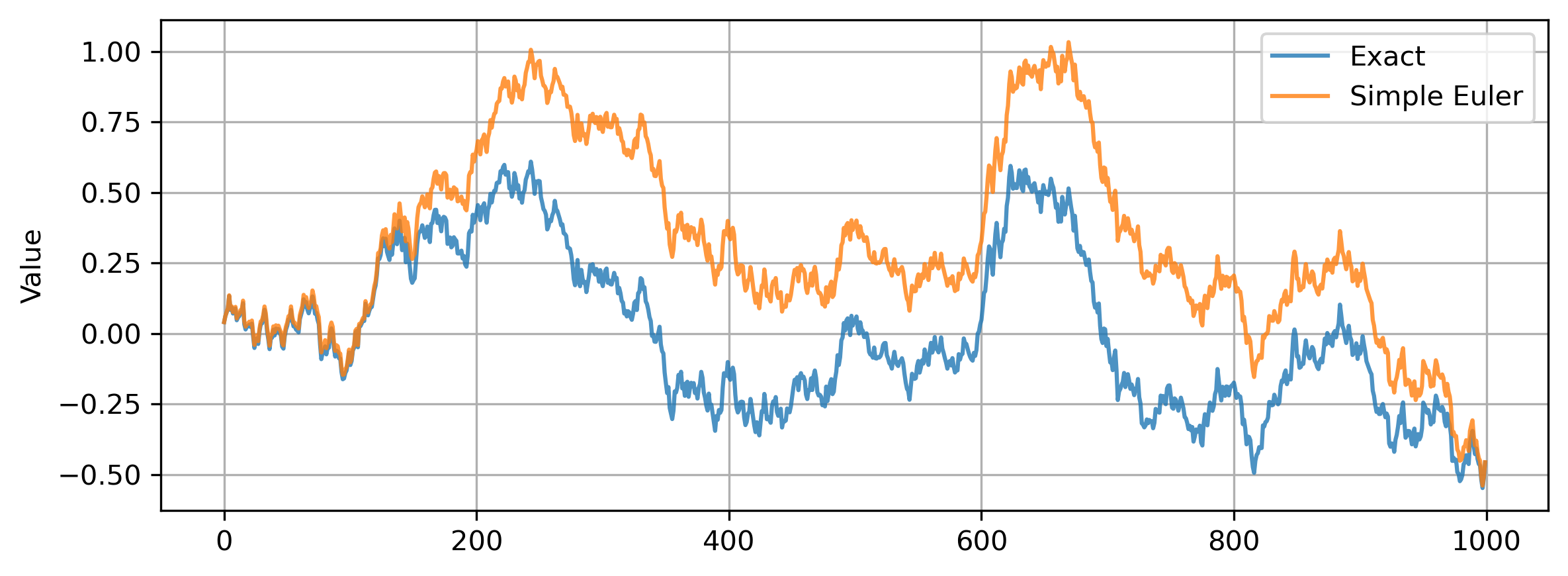

We compare the Euler-Maruyama discretization scheme with Doob’s exact simulation scheme. The two methods utilize the same Gaussian noise for direct comparison. The parameters used are: ![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() . We can see that there is a noticeable difference. The order 1 discretization error accumulates because each step depends on its previous step.

. We can see that there is a noticeable difference. The order 1 discretization error accumulates because each step depends on its previous step.

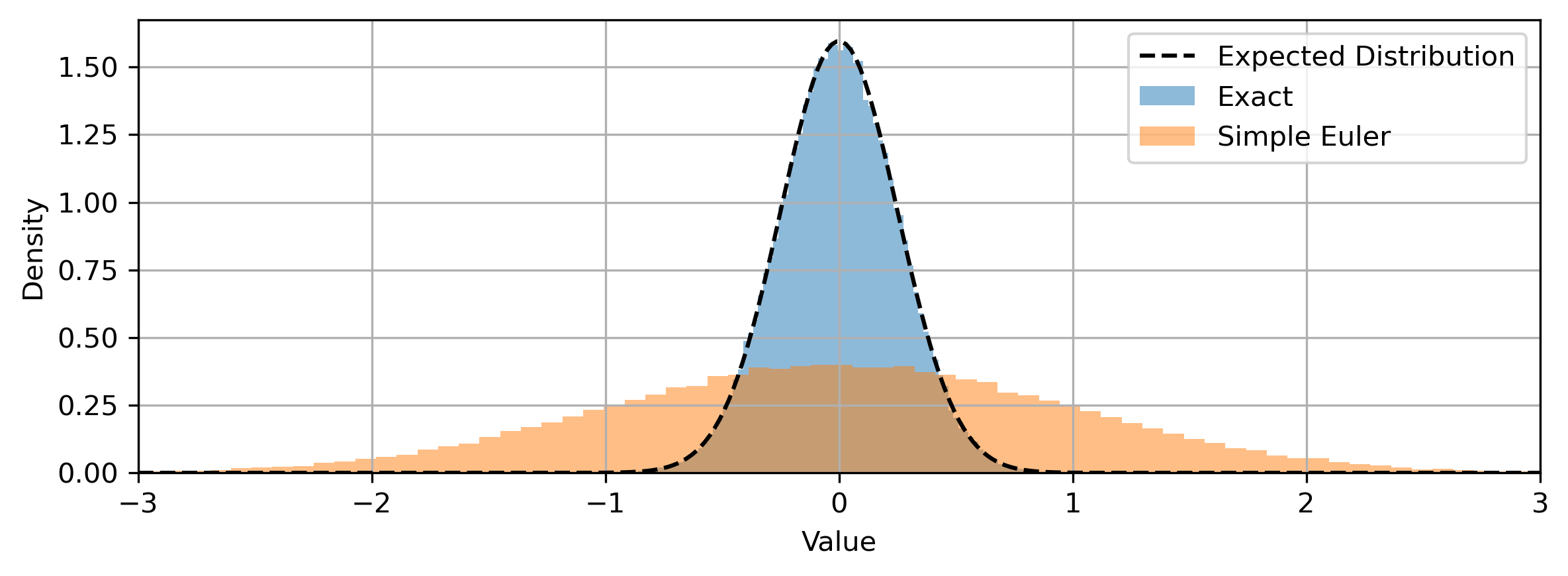

Now we look at their distributions at the end of the simulation:

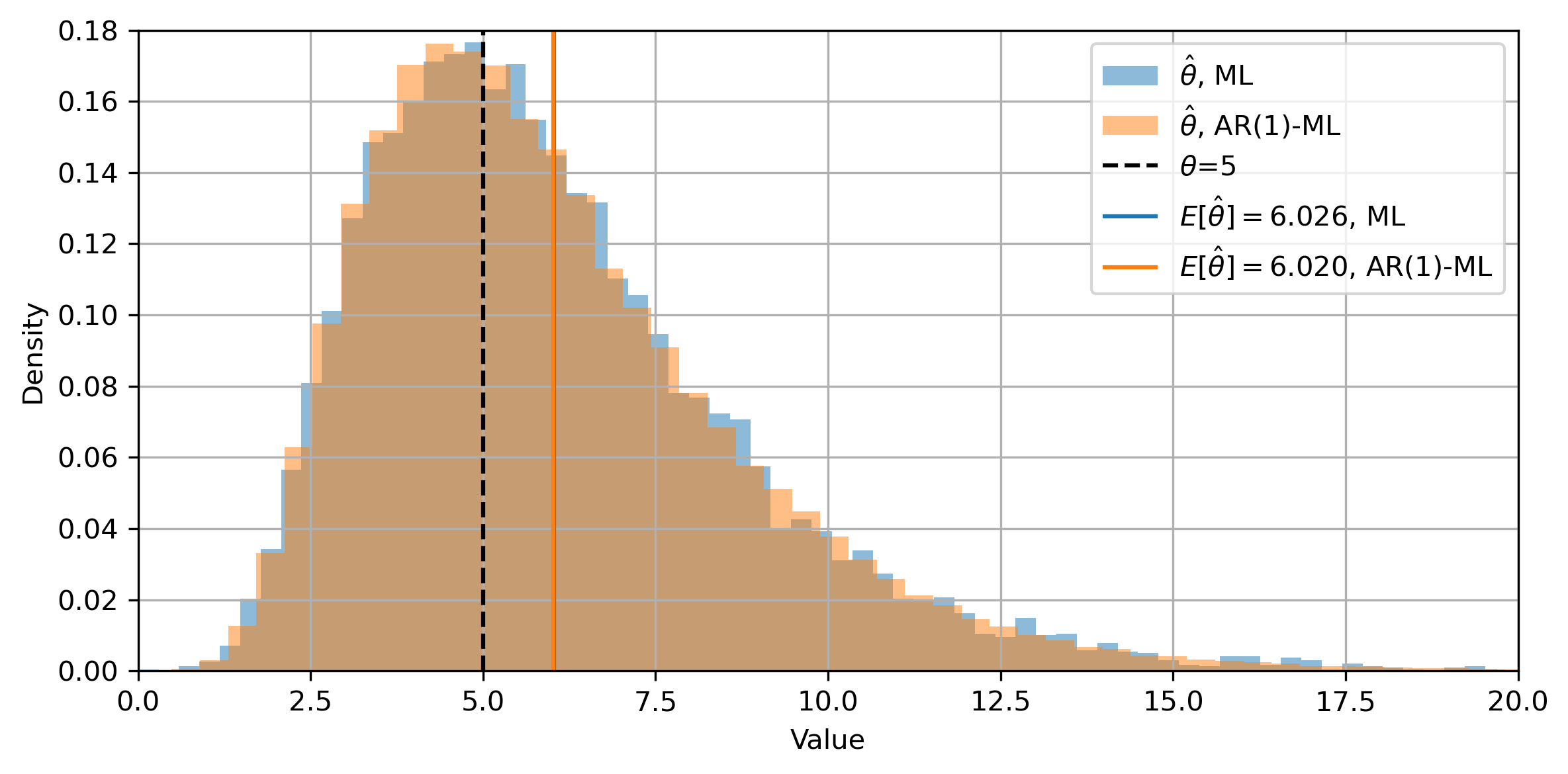

Experiment 2

For the rest of the experiments, We simulate the OU process exactly and use them to see the distribution of the estimator from AR(1) with a known mean. First, we use the direct ML estimation and compare it with the AR(1) method. The density plot is rather coarse because we only use 10000 Monte-Carlo paths for estimation for this method being relatively slow. The two estimations produce almost identical results.

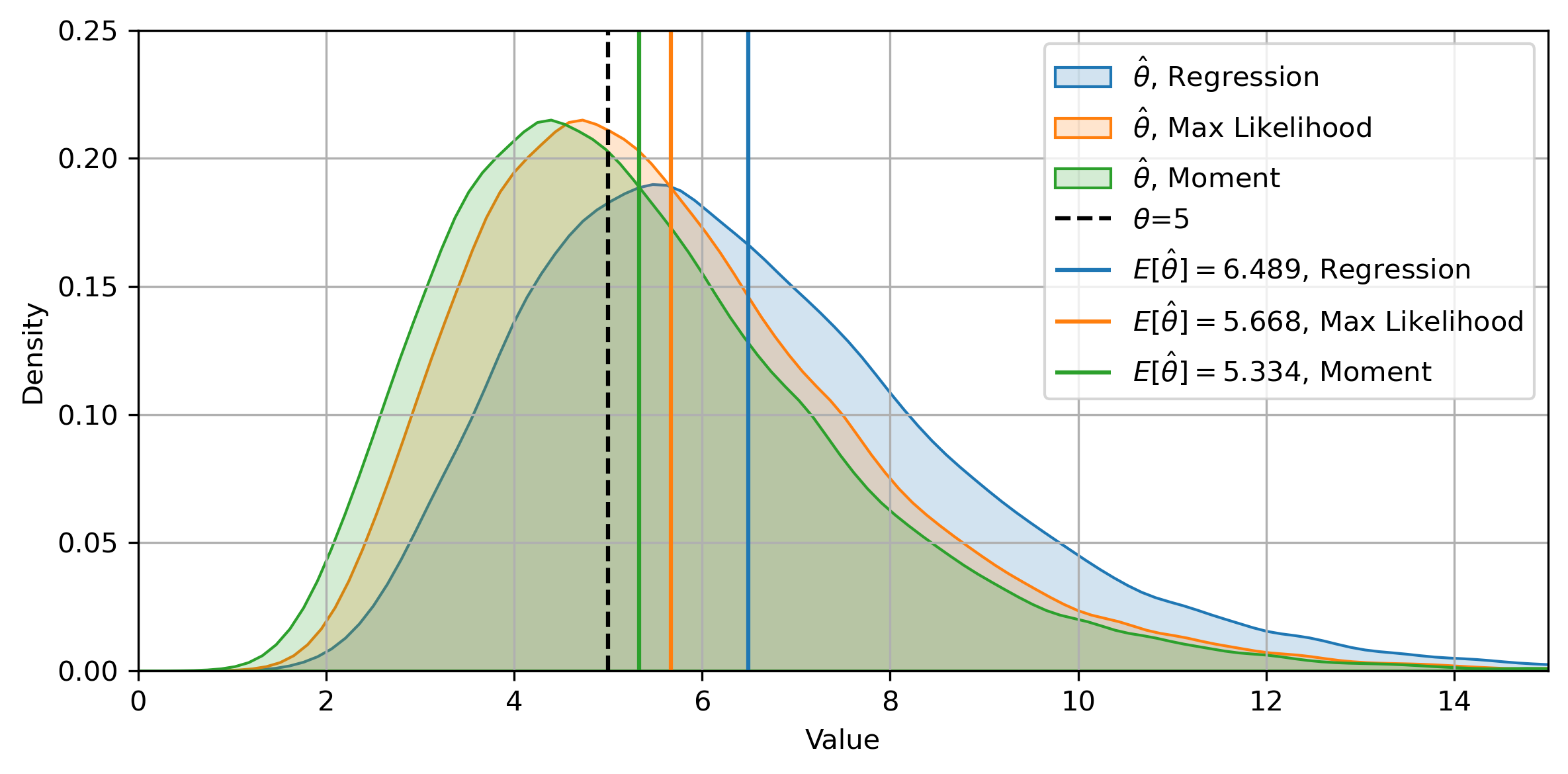

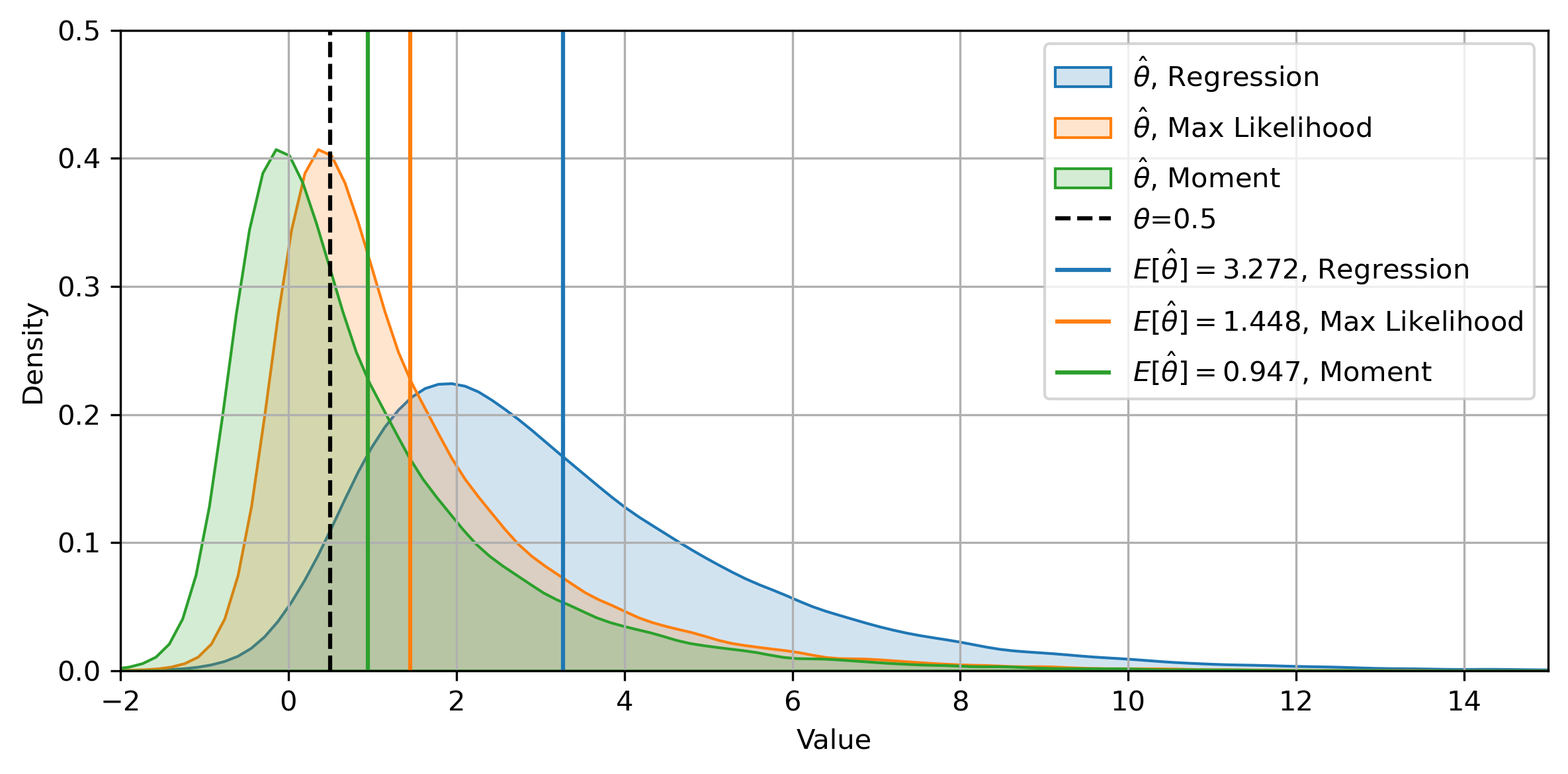

Experiment 3

We directly look at the distributions from the three different methods for large ![]() and small

and small ![]() . We change the KDE plot style for a clearer view. First, we have

. We change the KDE plot style for a clearer view. First, we have ![]() ,

, ![]() ,

, ![]() . This is one of the nicer cases and we can see the three methods mostly agree with each other.

. This is one of the nicer cases and we can see the three methods mostly agree with each other.

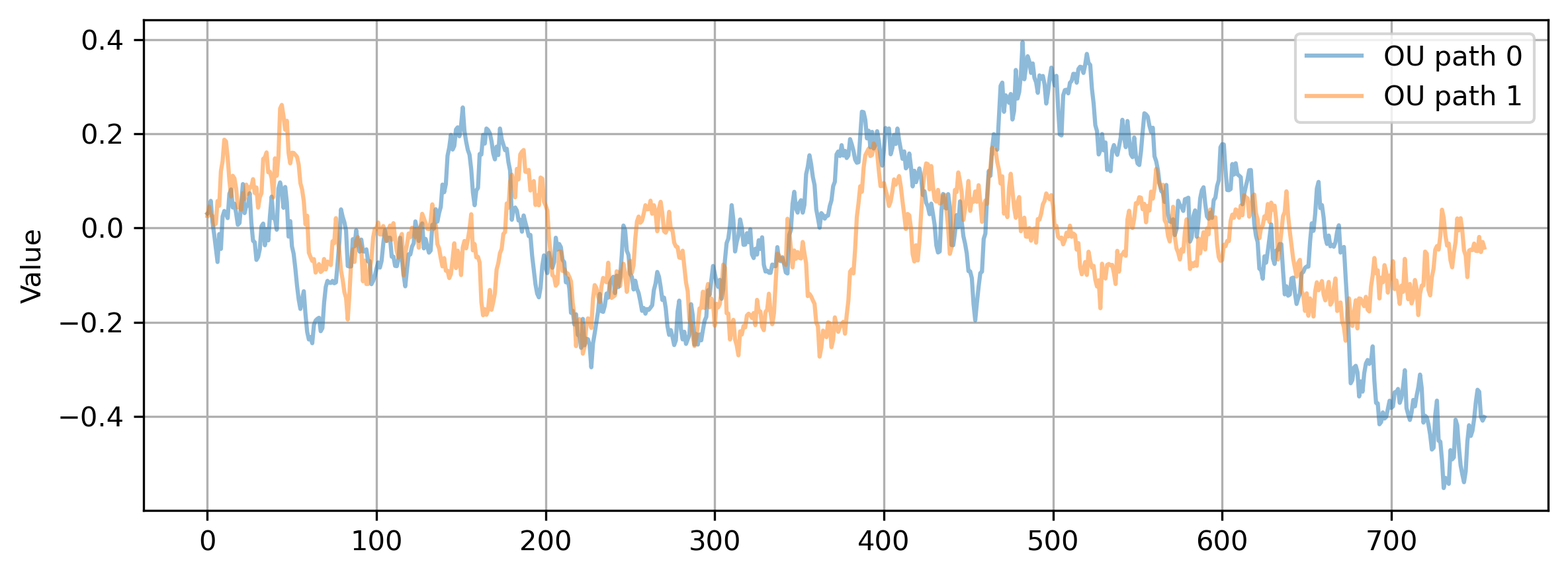

Here are some of the simulated OU paths. The half-life of mean reversion is relatively small.

Experiment 4

Then, we have ![]() ,

, ![]() ,

, ![]() . We can see that the relative bias grows larger, and we even have a great portion of our estimation such that

. We can see that the relative bias grows larger, and we even have a great portion of our estimation such that ![]() . Also, the discrepancies among the three methods enlarge by quite a lot. This further demonstrates the point that, when

. Also, the discrepancies among the three methods enlarge by quite a lot. This further demonstrates the point that, when ![]() is small, we should take the estimation with a grain of salt.

is small, we should take the estimation with a grain of salt.

Key Takeaways

Here are some of the major takeaways:

1. We can generate an OU process exactly at pretty much no extra cost.

2. The estimator for ![]() is biased and often has a large variance for AR(1)-based methods, and in principle, this is very difficult to improve. Since

is biased and often has a large variance for AR(1)-based methods, and in principle, this is very difficult to improve. Since ![]() is often overestimated, under a pairs trading scenario, the model is often too optimistic, because faster mean-reversion indicates more profit.

is often overestimated, under a pairs trading scenario, the model is often too optimistic, because faster mean-reversion indicates more profit.

3. The direct max-likelihood method produces identical results to the AR(1) method with a known mean using a max-likelihood estimator. But it is quite slower.

4. Two situations where we should not trust the estimations very much: 1. If the mean-reverting speed is small, or equivalently the half-life of mean-reversion is large (e.g. less than 20 crosses of mean in the timespan); 2. If the sample size is not large enough (e.g., less than 1 year of end-of-day data). Realistically in some scenarios, it is quite difficult to achieve either.

5. Estimations for ![]() and

and ![]() are in general much more reliable than

are in general much more reliable than ![]() .

.

6. Noises and jumps can further complicate the situation and need to be dealt with carefully.

References

- Bao, Y., Ullah, A. and Wang, Y., 2017. Distribution of the mean reversion estimator in the Ornstein–Uhlenbeck process. Econometric Reviews, 36(6-9), pp.1039-1056.

- Cartea, A. and Figueroa, M.G., 2005. Pricing in electricity markets: a mean reverting jump diffusion model with seasonality. Applied Mathematical Finance, 12(4), pp.313-335.

- Casella, B. and Roberts, G.O., 2011. Exact simulation of jump-diffusion processes with Monte Carlo applications. Methodology and Computing in Applied Probability, 13(3), pp.449-473.

- Doob, J.L., 1942. The Brownian movement and stochastic equations. Annals of Mathematics, pp.351-369.

- Grimmett, G. and Stirzaker, D., 2020. Probability and random processes. Oxford university press.

- Gloter, A., Loukianova, D. and Mai, H., 2018. Jump filtering and efficient drift estimation for Lévy-driven SDEs. The Annals of Statistics, 46(4), pp.1445-1480.

- Glasserman, P., 2013. Monte Carlo methods in financial engineering (Vol. 53). Springer Science & Business Media.

- Holý, V. and Tomanová, P., 2018. Estimation of Ornstein-Uhlenbeck Process Using Ultra-High-Frequency Data with Application to Intraday Pairs Trading Strategy. arXiv preprint arXiv:1811.09312.

- Mai, H., 2012. Drift estimation for jump diffusions.

- Meyer-Brandis, T. and Tankov, P., 2008. Multi-factor jump-diffusion models of electricity prices. International Journal of Theoretical and Applied Finance, 11(05), pp.503-528.

- Phillips, P.C. and Yu, J., 2009. Maximum likelihood and Gaussian estimation of continuous time models in finance. In Handbook of financial time series (pp. 497-530). Springer, Berlin, Heidelberg.

- Stübinger, J. and Endres, S., 2018. Pairs trading with a mean-reverting jump–diffusion model on high-frequency data. Quantitative Finance, 18(10), pp.1735-1751.

- Yu, J., 2012. Bias in the estimation of the mean reversion parameter in continuous time models. Journal of Econometrics, 169(1), pp.114-122.

- Zhou, Q. and Yu, J., 2010. Asymptotic distributions of the least squares estimator for diffusion processes.